A hot potato: Tech companies are in a race to create superintelligent AI, regardless of what the consequences of creating something much smarter than humans might be. It's led to hundreds of public figures from the world of tech, politics, media, education, religion, and even royalty signing an open letter calling for a ban on the development of superintelligence until certain conditions are met.

Among the 800+ signatories are two of the "Godfathers of AI," Geoffrey Hinton and Yoshua Bengio. Apple co-founder Steve Wozniak and Virgin Group founder Richard Branson are on the list, too.

Donald Trump's former chief strategist Steve Bannon, former Joint Chiefs of Staff Chairman Mike Mullen, Glenn Beck, Joseph Gordon-Levitt, and musicians will.i.am and Grimes also signed the letter. Even Prince Harry and Meghan, the Duke and Duchess of Sussex, feel strongly about the subject.

The letter calls for a prohibition on the development of superintelligent AI until there is both broad scientific consensus that it will be done safely and controllably, and strong public support.

The statement, organized by the AI safety group Future of Life Institute (FLI), acknowledges that AI tools may bring benefits such as unprecedented health and prosperity. But it says the companies' goal of creating superintelligence in the coming decade, one that can significantly outperform all humans on essentially all cognitive tasks, has raised concerns.

The usual worries were cited: AI taking so many jobs that humans become economically obsolete, along with disempowerment, the loss of freedom, civil liberties, dignity, and control, and national security risks. The potential for total human extinction is also mentioned.

Last week, the FLI said that OpenAI had issued subpoenas to it and its president as a form of retaliation for calling for AI oversight.

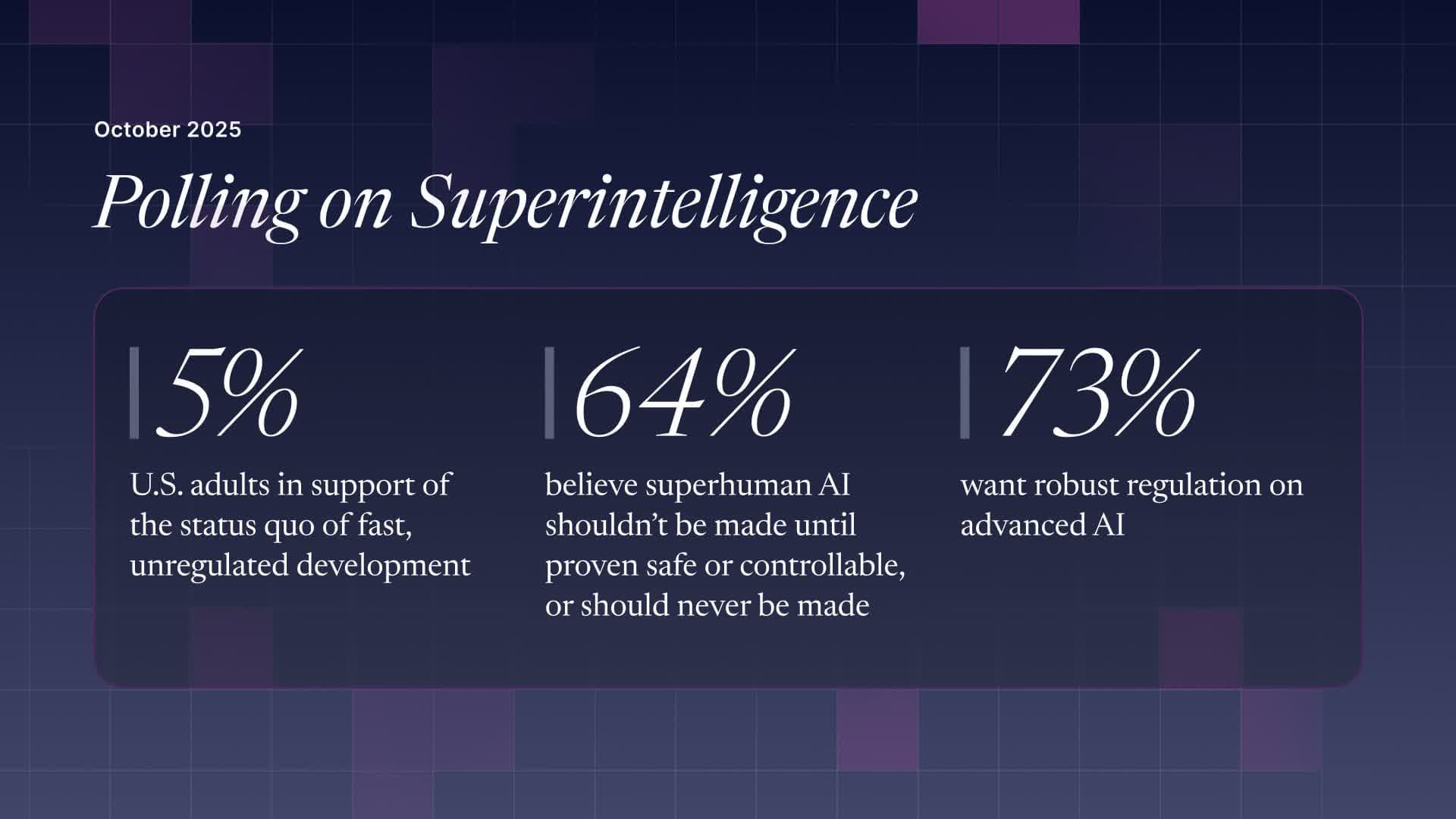

According to a US poll of 2,000 adults, just 5% of people support the "move fast and break things" mantra that most AI companies apply to developing the technology. Almost three-quarters of Americans want robust regulation of advanced AI, and six out of ten agree that it should not be created until it is proven to be safe or controllable.

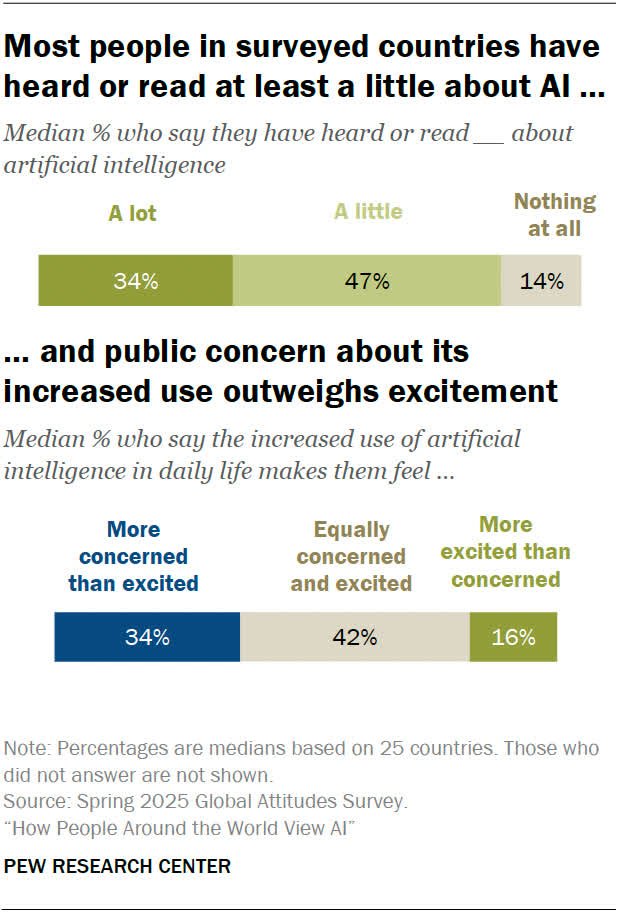

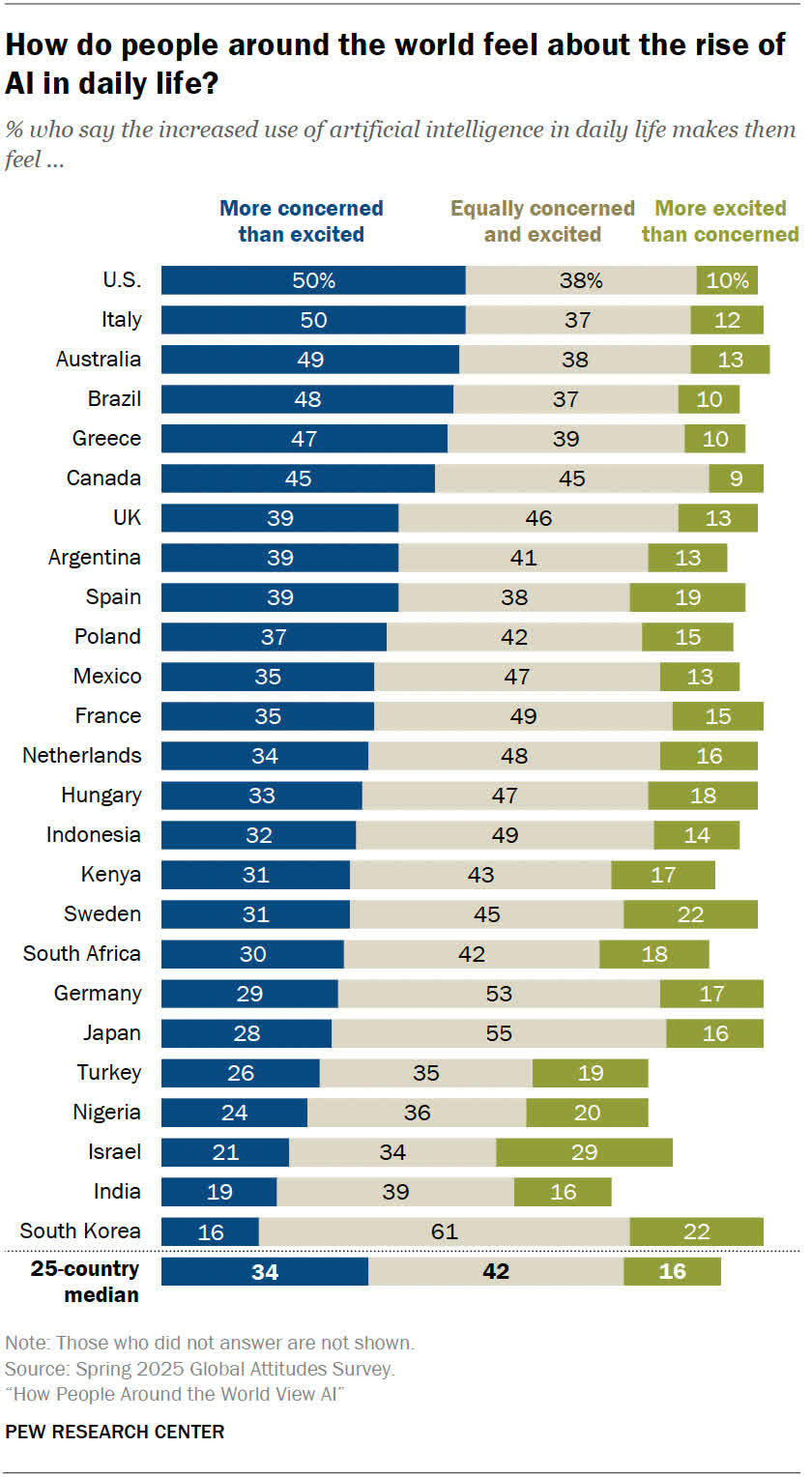

A recent Pew Research Center survey found that in the US, 44% of people trust the government to regulate AI, while 47% are distrustful.

Sam Altman recently gave another prediction on when superintelligence will arrive. The OpenAI boss said it will be here by 2030, adding that up to 40% of tasks that happen in the economy today will be taken over by AI in the not very distant future.

Meta is also chasing superintelligence. CEO Mark Zuckerberg said it is close and will "empower" individuals. The company recently split its Meta Superintelligence Labs into four smaller groups, so the technology might be further away than Zuckerberg predicted.

Ultimately, it's unlikely the letter will prompt AI companies to slow their superintelligence development. A similar letter in 2023, which was signed by Elon Musk, had little to no effect.