Then, a year and a half ago, he started working with the Bengaluru-based startup Fasal. The company uses a combination of Internet of Things (IoT) sensors, predictive modeling, and AI-powered farm-level weather forecasts to provide farmers with tailored advice, including when to water their crops, when to apply nutrients, and when the farm is at risk of pest attacks.

Harish B. uses Fasal’s modeling to make decisions about irrigation and the application of pesticides and fertilizer. Edd Gent

Harish B. says he’s happy with the service and has significantly reduced his pesticide and water use. The predictions are far from perfect, he says, and he still relies on his farmer’s intuition if the advice doesn’t seem to stack up. But he says that the technology is paying for itself.

“Before, with our old method, we were using more water,” he says. “Now it’s more accurate, and we only use as much as we need.” He estimates that the farm is using 30 percent less water than before he started with Fasal.

Indian farmers who are looking to update their approach have an increasing number of options, thanks to the country’s burgeoning “agritech” sector. A host of startups are using AI and other digital technologies to provide bespoke farming advice and improve rural supply chains.

And the Indian government is all in: In 2018, the national government declared agriculture to be one of the focus areas of its AI strategy, and it just announced roughly US $300 million in funding for digital agriculture projects. With considerable government support and India’s depth of technical talent, there’s hope that AI efforts will lift up the country’s massive and underdeveloped agricultural sector. India could even become a testbed for agricultural innovations that could be exported across the developing world. But experts also caution that technology is not a panacea, and say that without careful consideration, the disruptive forces of innovation could harm farmers as much as they help.

How AI is helping India’s small farms

India is still a deeply agrarian society, with roughly 65 percent of the population involved in agriculture. Thanks to the “green revolution” of the 1960s and 1970s, when new crop varieties, fertilizers, and pesticides boosted yields, the country has long been self-sufficient when it comes to food—an impressive feat for a country of 1.4 billion people. It also exports more than $40 billion worth of foodstuffs annually. But for all its successes, the agricultural sector is also extremely inefficient.

Roughly 80 percent of India’s farms are small holdings of less than 2 hectares (about 5 acres), which makes it hard for those farmers to generate enough revenue to invest in equipment and services. Supply chains that move food from growers to market are also disorganized and reliant on middlemen, a situation that eats into farmers’ profits and leads to considerable wastage. These farmers have trouble accessing credit because of the small size of their farms and the lack of financial records, and so they’re often at the mercy of loan sharks. Farmer indebtedness has reached worrying proportions: More than half of rural households are in debt, with an average outstanding amount of nearly $900 (the equivalent of more than half a year’s income). Researchers have identified debt as the leading factor behind an epidemic of farmer suicides in India. In the state of Maharashtra, which leads the country in farmer suicides, 2,851 farmers committed suicide in 2023.

While technology won’t be a cure-all for these complex social problems, Ananda Verma, founder of Fasal, says there are many ways it can make farmers’ lives a little easier. His company sells IoT devices that collect data on crucial parameters including soil moisture, rainfall, atmospheric pressure, wind speed, and humidity.

This data is passed to Fasal’s cloud servers, where it’s fed into machine learning models, along with weather data from third parties, to produce predictions about a farm’s local microclimate. Those results are input into custom-built agronomic models that can predict things like a crop’s water requirements, nutrient uptake, and susceptibility to pests and disease.

The output of these models is used to advise the farmer on when to water or when to apply fertilizer or pesticides. Typically, farmers make these decisions based on intuition or a calendar, says Verma. But this can lead to unnecessary application of chemicals or overwatering, which increases costs and reduces the quality of the crop. “[Our technology] helps the farmer make very precise and accurate decisions, completely removing any kind of guesswork,” he says.
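Fasal has not published its agronomic models, so what follows is only an illustration of the general idea of turning a soil-moisture reading plus a weather forecast into an irrigation call. It is a minimal sketch of a textbook "bucket" soil-water balance. The names (`DailyForecast`, `irrigation_advice`), the millimeter units, the daily time step, and the thresholds are all assumptions made for this example, not Fasal's method.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class DailyForecast:
    """One day of hypothetical forecast input, in millimeters of water."""
    rain_mm: float  # rainfall expected to reach the soil
    et_mm: float    # crop evapotranspiration: water the crop and soil will lose


def irrigation_advice(
    soil_water_mm: float,
    forecasts: list[DailyForecast],
    refill_threshold_mm: float,
    field_capacity_mm: float,
) -> tuple[int | None, float]:
    """Project root-zone soil water day by day with a simple bucket model.

    Hypothetical illustration, not Fasal's model. Returns (day_index, refill_mm)
    for the first forecast day on which projected soil water drops below
    refill_threshold_mm; refill_mm is the depth needed to bring the root zone
    back to field capacity. Returns (None, 0.0) if no irrigation is needed
    within the forecast horizon.
    """
    water = soil_water_mm  # assumed to come from a soil-moisture sensor reading
    for day, f in enumerate(forecasts):
        # Rain fills the bucket up to field capacity (any excess drains away),
        # then the day's evapotranspiration is subtracted.
        water = min(water + f.rain_mm, field_capacity_mm) - f.et_mm
        if water < refill_threshold_mm:
            return day, round(field_capacity_mm - water, 1)
    return None, 0.0


if __name__ == "__main__":
    week = [DailyForecast(0.0, 6.0), DailyForecast(0.0, 6.0), DailyForecast(10.0, 5.0)]
    week += [DailyForecast(0.0, 7.0)] * 4
    print(irrigation_advice(80.0, week, refill_threshold_mm=50.0, field_capacity_mm=100.0))
    # prints (6, 55.0)
```

The point of the sketch is the contrast the article draws with calendar-based watering: the decision depends on measured soil water and the forecast, so a rainy day pushes the next irrigation back automatically. A production system would also account for crop stage, soil type, and forecast uncertainty.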

Fasal’s ability to provide these services has been facilitated by a rapid expansion of digital infrastructure in India, in particular countrywide 4G coverage with rock-bottom data prices. The number of smartphone users has jumped from fewer than 200 million a decade ago to over a billion today. “We are able to deploy these devices in rural corners of India where sometimes you don’t even find roads, but there is still Internet,” says Verma.

Reducing water and chemical use on farms can also ease pressure on the environment. An independent audit found that across the roughly 80,000 hectares where Fasal is currently operating, it has helped save 82 billion liters of water. The company has also reduced the amount of electricity farmers must use to power water pumps, avoiding 54,000 tonnes of greenhouse gas emissions, and it has cut farmers’ use of chemicals by 127 tonnes.

Problems with access and trust

However, getting these capabilities into the hands of more farmers will be tricky. Harish B. says some smaller farmers in his area have shown interest in the technology, but they can’t afford it (neither the farmers nor the company would disclose the product’s price). Taking full advantage of Fasal’s advice also requires investment in other equipment like automated irrigation, putting the solution even further out of reach.

Verma says farming cooperatives could provide a solution. Known as farmer producer organizations, or FPOs, they provide a legal structure for groups of small farmers to pool their resources, boosting their ability to negotiate with suppliers and customers and invest in equipment and services. In reality, though, it can be hard to set up and run an FPO. Harish B. says some of his neighbors attempted to create an FPO, but they struggled to agree on what to do, and it was ultimately abandoned.

Cropin’s technology combines satellite imagery with weather data to provide customized advice. Cropin

Other agritech companies are looking higher up the food chain for customers. Bengaluru-based Cropin provides precision agriculture services based on AI-powered analyses of satellite imagery and weather patterns. Farmers can use the company’s app to outline the boundaries of their plot simply by walking around with their smartphone’s GPS enabled. Cropin then downloads satellite data for those coordinates and combines it with climate data to provide irrigation advice and pest advisories. Other insights include analyses of how well different plots are growing, yield predictions, advice on the optimum time to harvest, and even suggestions on the best crops to grow.
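The article does not say how Cropin processes the boundary a farmer walks. As a hedged illustration of one standard first step, estimating the plot's area from the GPS fixes, here is a sketch that projects latitude and longitude onto a flat local grid (an equirectangular approximation, adequate for fields a few hundred meters across) and applies the shoelace formula. The function names are hypothetical.

```python
from __future__ import annotations

import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius, meters


def to_local_xy(lat: float, lon: float, lat0: float, lon0: float) -> tuple[float, float]:
    """Project degrees onto a flat grid in meters centered at (lat0, lon0).

    Equirectangular approximation: fine for a single field, not for large regions.
    """
    x = EARTH_RADIUS_M * math.radians(lon - lon0) * math.cos(math.radians(lat0))
    y = EARTH_RADIUS_M * math.radians(lat - lat0)
    return x, y


def plot_area_hectares(boundary: list[tuple[float, float]]) -> float:
    """Area enclosed by GPS fixes (lat, lon in degrees) taken walking the boundary."""
    if len(boundary) < 3:
        raise ValueError("a boundary needs at least three points")
    # Center the projection on the mean of the points to keep distortion small.
    lat0 = sum(lat for lat, _ in boundary) / len(boundary)
    lon0 = sum(lon for _, lon in boundary) / len(boundary)
    pts = [to_local_xy(lat, lon, lat0, lon0) for lat, lon in boundary]
    # Shoelace formula: sum the cross products of consecutive vertices,
    # wrapping from the last point back to the first.
    twice_area = 0.0
    for (x1, y1), (x2, y2) in zip(pts, pts[1:] + pts[:1]):
        twice_area += x1 * y2 - x2 * y1
    return abs(twice_area) / 2 / 10_000  # square meters -> hectares
```

A square walked roughly 100 meters on a side comes out at about 1 hectare, whichever direction the farmer walks. Consumer phone GPS can be off by several meters, so a real service would presumably clean up the track before trusting the boundary.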

But the company rarely sells its services directly to small farmers, admits Praveen Pankajakshan, Cropin’s chief scientist. Even more than cost, the farmer’s ability to interpret and implement the advice can be a barrier, he says. That’s why Cropin typically works with larger organizations like development agencies, local governments, or consumer-goods companies, which in turn work with networks of contract farmers. These organizations have field workers who can help farmers make sense of Cropin’s advisories.

Working with more-established intermediaries also helps solve a major problem for agritech startups: establishing trust. Farmers today are bombarded with pitches for new technology and services, says Pankajakshan, which can make them wary. “They don’t have problems in adopting technology or solutions, because often they understand that it can benefit them,” he says. “But they want to know that this has been tried out and these are not new ideas, new experiments.”

That perspective rings true to Harish C.S., who runs his family’s 24-hectare fruit farm north of Bengaluru. He’s a customer of Fasal and says the company’s services are making an appreciable difference to his bottom line. But he’s also conscious that he has the resources to experiment with new technology, a luxury that smaller farmers don’t have.

Harish C.S. says Fasal’s services are making his 24-hectare fruit farm more profitable. Edd Gent

A bad call on what crop to plant or when to irrigate can lead to months of wasted effort, says Harish C.S., so farmers are cautious and tend to make decisions based on recommendations from trusted suppliers or fellow farmers. “People would say: ‘On what basis should I apply that information which AI gave?’” he says. “‘Is there a proof? How many years has it worked? Has it worked for any known, reputable farmer? Has he made money?’”

While he’s happy with Fasal, Harish C.S. says he relies even more on YouTube, where he watches videos from a prominent pomegranate-growing expert. For him, technology’s ability to connect farmers and help them share best practices is its most powerful contribution to Indian agriculture.

Some are betting that AI could help farmers with that knowledge-sharing. The latest large language models (LLMs) provide a powerful new way to analyze and organize information, as well as the ability to interact with technology more naturally via language. That could help unlock the deep repositories of agricultural know-how shared by India’s farmers, says Rikin Gandhi, CEO of Digital Green, an international nonprofit that uses technology to help smallholders, or owners of small farms.

The nonprofit Digital Green records videos about farmers’ solutions to their problems and shows them in villages. Digital Green

Since 2008, the organization has been getting Indian farmers to record short videos explaining problems they faced and their solutions. A network of workers then tours rural villages putting on screenings. A study carried out by researchers at MIT’s Poverty Action Lab found that the program reduces the cost of getting farmers to adopt new practices from roughly $35 (when workers traveled to villages and met with individual farmers) to $3.50.

But the organization’s operations were severely curtailed during the COVID-19 pandemic, prompting Digital Green to experiment with simple WhatsApp bots that direct farmers to relevant videos in a database. Two years ago, it began training LLMs on transcripts of the videos to create a more sophisticated chatbot that can provide tailored responses.

Crucially, the chatbot can also incorporate personalized information, such as the user’s location, local weather, and market data. “Farmers don’t want to just get the generic Wikipedia, ChatGPT kind of answer,” Gandhi says. “They want very location-, time-specific advice.”

Two years ago, Digital Green began working on a chatbot trained on the organization’s videos about farming solutions. Digital Green

But simply providing farmers with advice through an app, no matter how smart it is, has its limits. “Information is not the only thing people are looking for,” says Gandhi. “They’re looking for ways that information can be connected to markets and products and services.”

So for the time being, Digital Green is still relying on workers to help farmers use the chatbot. Based on the organization’s own assessments, Gandhi thinks the new service could cut the cost of adopting new practices by another order of magnitude, to just 35 cents.

The downsides of AI for agritech

Not everyone is sold on AI’s potential to help farmers. In a 2022 paper, ecological anthropologist Glenn Stone argued that the penetration of big data technologies into agriculture in the global south could hold risks for farmers. Stone, a scholar in residence at Washington and Lee University, in Virginia, draws parallels between surveillance capitalism, which uses data collected about Internet users to manipulate their behavior, and what he calls surveillance agriculture, which he defines as data-based digital technologies that take decision-making away from the farmer.

The main concern is that these kinds of tools could erode the autonomy of farmers and steer their decision-making in ways that may not always help. What’s more, Stone says, the technology could interfere with existing knowledge-sharing networks. “There is a very real danger that local processes of agricultural learning, or ‘skilling,’ which are always partly social, will be disrupted and weakened when decision-making is appropriated by algorithms or AI,” he says.

Another concern, says Nandini Chami, deputy director of the advocacy group IT for Change, is who’s using the AI tools. She notes that big Indian agritech companies such as Ninjacart, DeHaat, and Crofarm are focused on using data and digital technologies to optimize rural supply chains. On the face of it, that’s a good thing: Roughly 10 percent of fruits and vegetables are wasted after harvest, and farmers’ profits are often eaten up by middlemen.

But efforts to boost efficiencies and bring economies of scale to agriculture tend to primarily benefit larger farms or agribusiness, says Chami, often leaving smallholders behind. Both in India and elsewhere, this is driving a structural shift in the economy as rural jobs dry up and people move to the cities in search of work. “A lot of small farmers are getting pushed out of agriculture into other occupations,” she says. “But we don’t have enough high-quality jobs to absorb them.”

Can AI revamp rural supply chains?

AI proponents say that with careful design, many of these same technologies can be used to help smaller farmers too. Purushottam Kaushik, head of the World Economic Forum’s Centre for the Fourth Industrial Revolution (C4IR), in Mumbai, is leading a pilot project that’s using AI and other digital technologies to streamline agricultural supply chains. It is already boosting the earnings of 7,000 chili farmers in the Khammam district in the state of Telangana.

In the state of Telangana, AI-powered crop quality assessments have boosted farmers’ profits. Digital Green

Launched in 2020 in collaboration with the state government, the project combined advice from Digital Green’s first-generation WhatsApp bot with AI-powered soil testing, AI-powered crop quality assessments, and a digital marketplace to connect farmers directly to buyers. Over 18 months, the project helped farmers boost yields by 21 percent and selling prices by 8 percent.

One of the key lessons from the project was that even the smartest AI solutions don’t work in isolation, says Kaushik. To be effective, they must be combined with other digital technologies and carefully integrated into existing supply chains.

In particular, the project demonstrated the importance of working with the much-maligned middlemen, who are often characterized as a drain on farmers’ incomes. These local businessmen aren’t merely traders; they also provide important services such as finance and transport. Without those services, agricultural supply chains would grind to a halt, says Abhay Pareek, who leads C4IR’s agriculture efforts. “They are very intrinsic to the entire ecosystem,” he says. “You have to make sure that they are also part of the entire process.”

The program is now being expanded to 20,000 farmers in the region. While it’s still early days, Pareek says, the work could be a template for efforts to modernize agriculture around the world. With India’s huge diversity of agricultural conditions, a large proportion of smallholder farmers, a burgeoning technology sector, and significant government support, the country is the ideal laboratory for testing technologies that can be deployed across the developing world, he says. “What is being done in India is sort of a testbed for most of the emerging economies,” he adds.

Dealing with data bottlenecks

As with many AI applications, one of the biggest bottlenecks to progress is data access. Vast amounts of important agricultural information are locked up in central and state government databases. There’s a growing recognition that for AI to fulfill its potential, this data needs to be made accessible.

Telangana’s state government is leading the charge. Rama Devi Lanka, director of its emerging technologies department, has spearheaded an effort to create an agriculture data exchange. Previously, when companies came to the government to request data access, there was a tortuous process of approvals. “It is not the way to grow,” says Lanka. “You cannot scale up like this.”

So, working with the World Economic Forum, her team has created a digital platform through which vetted organizations can sign up for direct access to key agricultural data sets held by the government. The platform has also been designed as a marketplace, which Lanka envisages will eventually allow anyone, from companies to universities, to share and monetize their private agricultural data sets.

India’s central government is looking to follow suit. The Ministry of Agriculture is developing a platform called Agri Stack that will create a national registry of farmers and farm plots linked to crop and soil data. This will be accessible to government agencies and approved private players, such as agritech companies, agricultural suppliers, and credit providers. The government hopes to launch the platform in early 2025.

But in the rush to bring data-driven techniques to agriculture, there’s a danger that farmers could get left behind, says IT for Change’s Chami.

Chami argues that the development of Agri Stack is driven by misplaced techno-optimism, which assumes that enabling digital innovation will inevitably lead to trickle-down benefits for farmers. But it could just as easily lead to e-commerce platforms replacing traditional networks of traders and suppliers, reducing the bargaining power of smaller farmers. Access to detailed, farm-level data without sufficient protections could also result in predatory targeting by land sharks or unscrupulous credit providers, she adds.

The Agri Stack proposal says access to individual records will require farmer consent. But details are hazy, says Chami, and it’s questionable whether India’s farmers, who are often illiterate and not very tech-savvy, could give informed consent. And the speed with which the program is being implemented leaves little time to work through these complicated problems.

“[Governments] are looking for easy solutions,” she says. “You’re not able to provide these quick fixes if you complicate the question by thinking about group rights, group privacy, and farmer interests.”

The people’s agritech

Some promising experiments are taking a more democratic approach. The Bengaluru-based nonprofit Vrutti is developing a digital platform that enables different actors in the agricultural supply chain to interact, collect and share data, and buy and sell goods. The key difference is that this platform is co-owned by its users, so they have a say in its design and principles, says Prerak Shah, who is leading its development.

Vrutti’s platform is primarily being used as a marketplace that allows FPOs to sell their produce to buyers. Each farmer’s transaction history is connected to a unique ID, and they can also record what crops they’re growing and what farming practices they’re using on their land. This data may ultimately become a valuable resource—for example, it could help members get lines of credit. Farmers control who can access their records, which are stored in a data wallet that they can transfer to other platforms.
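Vrutti has not published its data model. As a rough sketch of the consent-and-portability idea described above, here is a hypothetical wallet in which reads are refused unless the farmer has granted access, and the records can be exported for use on another platform. The class and method names, and the choice of JSON as the export format, are assumptions for illustration only.

```python
from __future__ import annotations

import json
from dataclasses import dataclass, field


@dataclass
class DataWallet:
    """Hypothetical farmer-held store of records keyed to a unique farmer ID."""
    farmer_id: str
    records: list[dict] = field(default_factory=list)
    allowed: set[str] = field(default_factory=set)  # parties the farmer has approved

    def add_record(self, record: dict) -> None:
        """Append a transaction, crop, or farming-practice record."""
        self.records.append(record)

    def grant(self, party: str) -> None:
        """Farmer consents to a party (say, a lender) reading the records."""
        self.allowed.add(party)

    def revoke(self, party: str) -> None:
        """Farmer withdraws consent; later reads by that party fail."""
        self.allowed.discard(party)

    def read(self, party: str) -> list[dict]:
        """Return copies of the records, but only to parties with consent."""
        if party not in self.allowed:
            raise PermissionError(f"{party} has no consent from {self.farmer_id}")
        return [dict(r) for r in self.records]

    def to_json(self) -> str:
        """Serialize the records so the farmer can move them to another platform."""
        return json.dumps({"farmer_id": self.farmer_id, "records": self.records})

    @classmethod
    def from_json(cls, blob: str) -> DataWallet:
        """Rebuild a wallet from an export; grants are not carried over."""
        data = json.loads(blob)
        return cls(farmer_id=data["farmer_id"], records=data["records"])
```

Leaving consent out of the export is a deliberate choice in this sketch: moving to a new platform means the farmer decides afresh who may see the history, rather than inheriting permissions granted elsewhere.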

Whether the private sector can be persuaded to adopt these more farmer-centric approaches remains to be seen. But India has a rich history of agricultural cooperatives and bottom-up social organizing, says Chami. That’s why she thinks that the country can be a proving ground not only for innovative new agricultural technologies, but also for more equitable ways of deploying them. “I think India will show the world how this contest between corporate-led agritech and the people’s agritech plays out,” she says.

This article appears in the February 2025 print issue as “AI’s Green Thumb.”

From Your Site Articles

Related Articles Around the Web

Many of today’s video generators now provide a tag with each generated clip, which can be added to the next prompt to improve continuity.

Intelliflicks Studios

Many of today’s video generators now provide a tag with each generated clip, which can be added to the next prompt to improve continuity.

Intelliflicks Studios

The AI-generated movie is set both in the modern world and the 19th century. Intelliflicks Studios

The AI-generated movie is set both in the modern world and the 19th century. Intelliflicks Studios

The SpiNNaker team assembles a million-core neuromorphic system.SpiNNaker

The SpiNNaker team assembles a million-core neuromorphic system.SpiNNaker A member of the SpiNNaker team checks on the company’s million-core machine.Steve Furber

A member of the SpiNNaker team checks on the company’s million-core machine.Steve Furber

Qualcomm Technologies

Qualcomm Technologies Qualcomm Technologies

Qualcomm Technologies The growing sophistication of stealth-busting tech casts doubt on continued investment in advanced submarines, each of which costs over $4 billion. Shown here are segments of a sub’s hull. Christopher Payne/Esto

The growing sophistication of stealth-busting tech casts doubt on continued investment in advanced submarines, each of which costs over $4 billion. Shown here are segments of a sub’s hull. Christopher Payne/Esto

Australia will base its AUKUS submarines at HMAS Stirling, a naval base near Perth. But the U.S. Navy would prefer to base the submarines in Guam, because it’s closer to China’s naval base on Hainan Island.

Australia will base its AUKUS submarines at HMAS Stirling, a naval base near Perth. But the U.S. Navy would prefer to base the submarines in Guam, because it’s closer to China’s naval base on Hainan Island.

The USS

The USS

The researchers tested the new technique (bottom), which generates both visual and verbal thoughts, against one that reasons only in text (middle) and one that skips reasoning and jumps straight to the answer.

The researchers tested the new technique (bottom), which generates both visual and verbal thoughts, against one that reasons only in text (middle) and one that skips reasoning and jumps straight to the answer.

Amazon

Amazon

Amazon

Amazon

Amazon

Amazon

The HyQReal hydraulic quadruped is tethered for power during experiments at IIT, but it can also run on battery power.IIT

The HyQReal hydraulic quadruped is tethered for power during experiments at IIT, but it can also run on battery power.IIT HyQReal’s telepresence control system offers haptic feedback and a large workspace.IIT

HyQReal’s telepresence control system offers haptic feedback and a large workspace.IIT Harish B. uses Fasal’s modeling to make decisions about irrigation and the application of

Harish B. uses Fasal’s modeling to make decisions about irrigation and the application of  Cropin’s technology combines satellite imagery with weather data to provide customized advice. Cropin

Cropin’s technology combines satellite imagery with weather data to provide customized advice. Cropin  Harish C.S. says Fasal’s services are making his 24-hectare fruit farm more profitable.Edd Gent

Harish C.S. says Fasal’s services are making his 24-hectare fruit farm more profitable.Edd Gent  The nonprofit Digital Green records videos about farmers’ solutions to their problems and shows them in villages. Digital Green

The nonprofit Digital Green records videos about farmers’ solutions to their problems and shows them in villages. Digital Green  Two years ago, Digital Green began working on a chatbot trained on the organization’s videos about farming solutions. Digital Green

Two years ago, Digital Green began working on a chatbot trained on the organization’s videos about farming solutions. Digital Green  In the state of Telangana, AI-powered crop quality assessments have boosted farmers’ profits. Digital Green

In the state of Telangana, AI-powered crop quality assessments have boosted farmers’ profits. Digital Green

Mined pegmatite is later processed to extract rubidium and lithium from the mineral. Everest Metals

Mined pegmatite is later processed to extract rubidium and lithium from the mineral. Everest Metals Chinmay Hegde, an Associate Professor of Computer Science and Engineering and Electrical and Computer Engineering at

Chinmay Hegde, an Associate Professor of Computer Science and Engineering and Electrical and Computer Engineering at

Chinmay Hegde, an Associate Professor of Computer Science and Engineering and Electrical and Computer Engineering at

Chinmay Hegde, an Associate Professor of Computer Science and Engineering and Electrical and Computer Engineering at  Challenge frame of original and

Challenge frame of original and

The Rivian R1S’s touchscreen dashboard comes with plenty of bells and whistles.Rivian

The Rivian R1S’s touchscreen dashboard comes with plenty of bells and whistles.Rivian

Christian Gralingen

Christian Gralingen

UNSW Sydney has more than 300 researchers applying AI across various critical fields such as

UNSW Sydney has more than 300 researchers applying AI across various critical fields such as  This pocket-size, chip-based optical gyroscope from Anello Photonics is just as accurate as bulkier versions.Anello Photonics

This pocket-size, chip-based optical gyroscope from Anello Photonics is just as accurate as bulkier versions.Anello Photonics

The Shopper Transparency Tool used optical character recognition to parse workers’ screenshots and find the relevant information (A). The data from each worker was stored and analyzed (B), and workers could interact with the tool by sending various commands to learn more about their pay (C). Dana Calacci

The Shopper Transparency Tool used optical character recognition to parse workers’ screenshots and find the relevant information (A). The data from each worker was stored and analyzed (B), and workers could interact with the tool by sending various commands to learn more about their pay (C). Dana Calacci

Throughout the worker protests, Shipt said only that it had updated its pay algorithm to better match payments to the labor required for jobs; it wouldn’t provide detailed information about the new algorithm. Its corporate photographs present idealized versions of happy Shipt shoppers. Shipt

Throughout the worker protests, Shipt said only that it had updated its pay algorithm to better match payments to the labor required for jobs; it wouldn’t provide detailed information about the new algorithm. Its corporate photographs present idealized versions of happy Shipt shoppers. Shipt

Shipt workers protested in front of the headquarters of Target (which owns Shipt) in October 2020. They demanded the company’s return to a pay algorithm that paid workers based on a simple and transparent formula. The SHIpT List

Shipt workers protested in front of the headquarters of Target (which owns Shipt) in October 2020. They demanded the company’s return to a pay algorithm that paid workers based on a simple and transparent formula. The SHIpT List

Willy Solis spearheaded the effort to determine how Shipt had changed its pay algorithm by organizing his fellow Shipt workers to send in data about their pay—first directly to him, and later using a textbot.Willy Solis

Willy Solis spearheaded the effort to determine how Shipt had changed its pay algorithm by organizing his fellow Shipt workers to send in data about their pay—first directly to him, and later using a textbot.Willy Solis

ProtGPS uses a machine-learning framework to predict protein localization within condensate compartments.

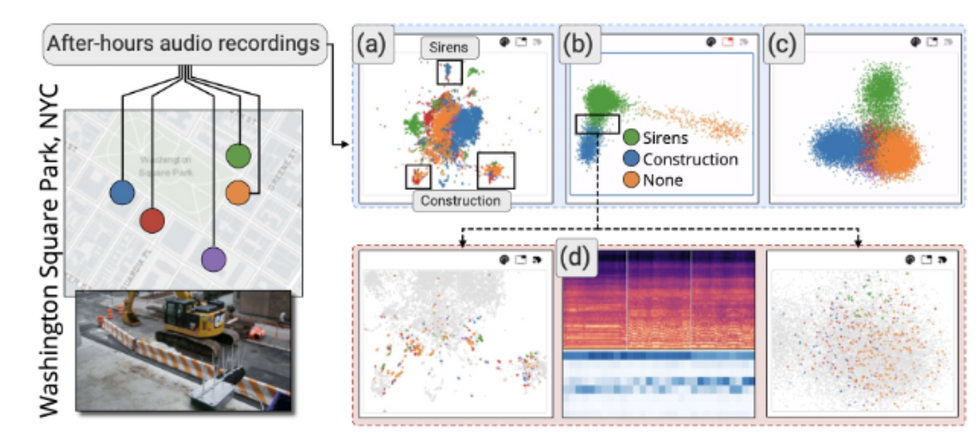

ProtGPS uses a machine-learning framework to predict protein localization within condensate compartments. Claudio Silva is the co-director of the Visualization Imaging and Data Analytics (VIDA) Center and professor of computer science and engineering at the NYU Tandon School of Engineering and NYU Center for Data Science.NYU Tandon

Claudio Silva is the co-director of the Visualization Imaging and Data Analytics (VIDA) Center and professor of computer science and engineering at the NYU Tandon School of Engineering and NYU Center for Data Science.NYU Tandon

Claudio Silva is the co-director of the Visualization Imaging and Data Analytics (VIDA) Center and professor of computer science and engineering at the NYU Tandon School of Engineering and NYU Center for Data Science.NYU Tandon

Claudio Silva is the co-director of the Visualization Imaging and Data Analytics (VIDA) Center and professor of computer science and engineering at the NYU Tandon School of Engineering and NYU Center for Data Science.NYU Tandon

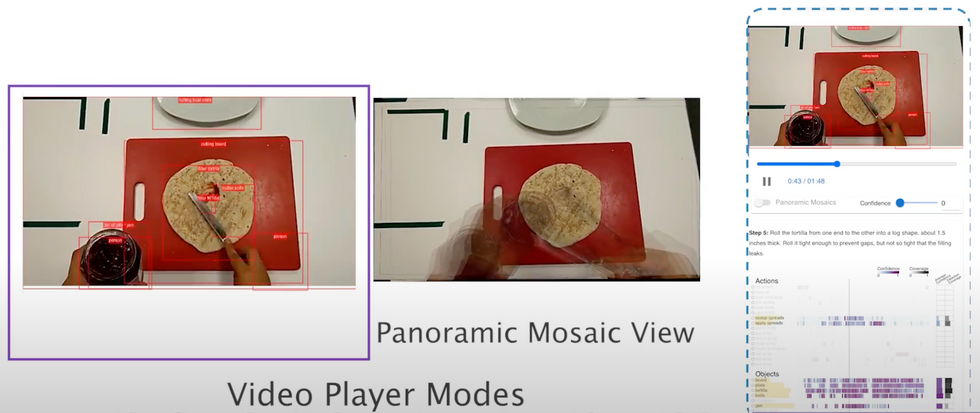

In building the technology, Silva’s team turned to a specific task that required a lot of visual analysis, and could benefit from a checklist based system: cooking.

NYU Tandon

In building the technology, Silva’s team turned to a specific task that required a lot of visual analysis, and could benefit from a checklist based system: cooking.

NYU Tandon

ARGUS, the interactive visual analytics tool, allows for real-time monitoring and debugging while an AR system is in use. It lets developers see what the AR system sees and how it’s interpreting the environment and user actions. They can also adjust settings and record data for later analysis.NYU Tandon

ARGUS, the interactive visual analytics tool, allows for real-time monitoring and debugging while an AR system is in use. It lets developers see what the AR system sees and how it’s interpreting the environment and user actions. They can also adjust settings and record data for later analysis.NYU Tandon

MP Materials’ has named its new, state-of-the-art magnet manufacturing facility Independence.Business Wire

MP Materials’ has named its new, state-of-the-art magnet manufacturing facility Independence.Business Wire The

The

Boom says its Symphony turbofan engine will be quieter than Concorde’s deafening afterburners were.

Boom says its Symphony turbofan engine will be quieter than Concorde’s deafening afterburners were. Boom’s supersonic jets won’t rely on a droop-nose design like the Concorde had.Boom Supersonic

Boom’s supersonic jets won’t rely on a droop-nose design like the Concorde had.Boom Supersonic