Introduction

This is my first time installing k8s with containerd as the CRI runtime, so I am leaving this article as a work log.

Environment

- Bare metal

- Ubuntu 20.04.2

- Behind a proxy

Components installed

- containerd v1.3.3

- kubernetes v1.20.4

- Calico v3.18.0

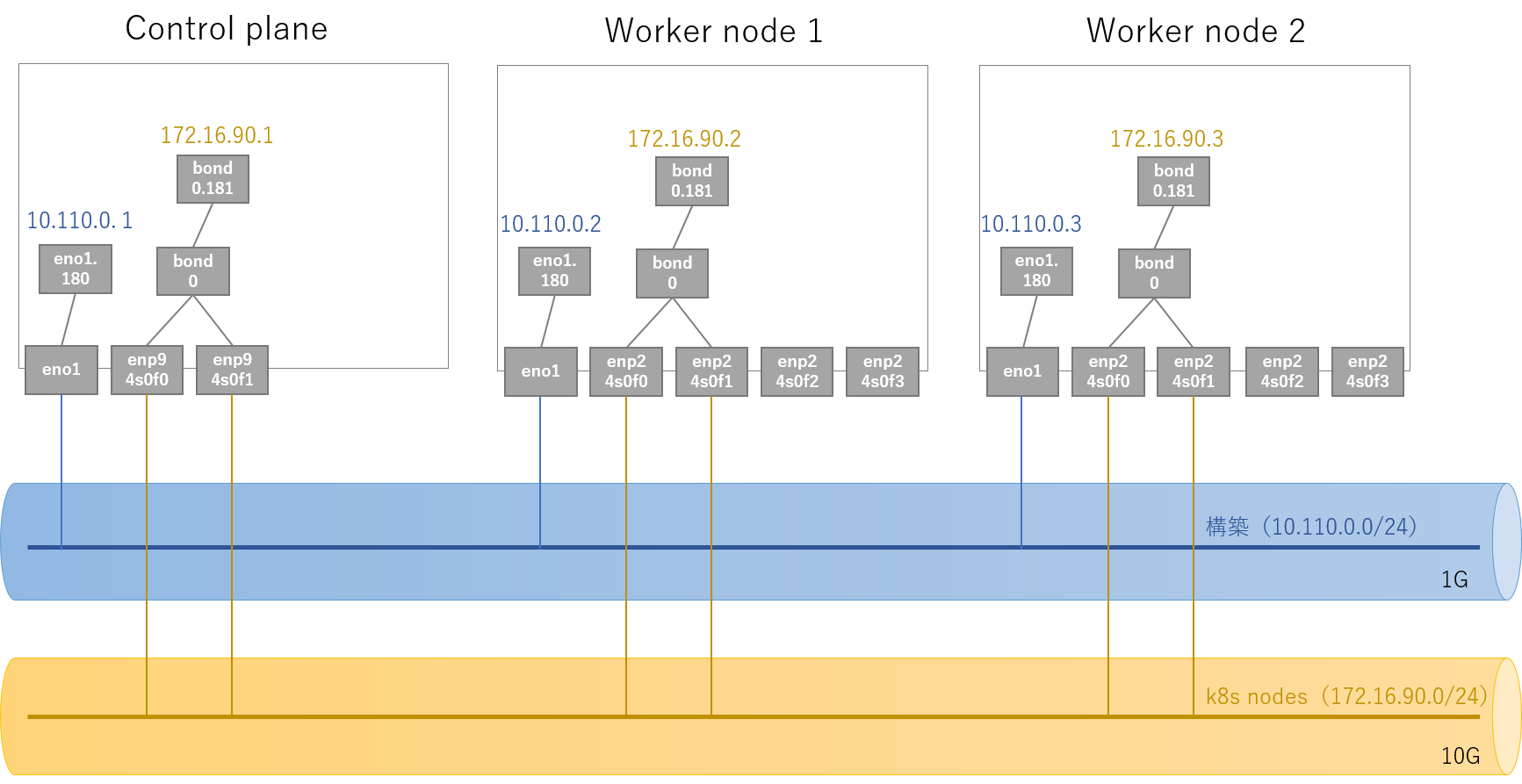

サーバー構成

Work log

Installing the control plane

Installing containerd

Follow the k8s documentation.

Install and configure prerequisites:

controlplane:~$ cat <<EOF | sudo tee /etc/modules-load.d/containerd.conf

> overlay

> br_netfilter

> EOF

overlay

br_netfilter

controlplane:~$ sudo modprobe overlay

controlplane:~$ sudo modprobe br_netfilter

controlplane:~$ cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf

> net.bridge.bridge-nf-call-iptables = 1

> net.ipv4.ip_forward = 1

> net.bridge.bridge-nf-call-ip6tables = 1

> EOF

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

controlplane:~$ sudo sysctl --system

* Applying /etc/sysctl.d/10-console-messages.conf ...

kernel.printk = 4 4 1 7

* Applying /etc/sysctl.d/10-ipv6-privacy.conf ...

net.ipv6.conf.all.use_tempaddr = 2

net.ipv6.conf.default.use_tempaddr = 2

* Applying /etc/sysctl.d/10-kernel-hardening.conf ...

kernel.kptr_restrict = 1

* Applying /etc/sysctl.d/10-link-restrictions.conf ...

fs.protected_hardlinks = 1

fs.protected_symlinks = 1

* Applying /etc/sysctl.d/10-magic-sysrq.conf ...

kernel.sysrq = 176

* Applying /etc/sysctl.d/10-network-security.conf ...

net.ipv4.conf.default.rp_filter = 2

net.ipv4.conf.all.rp_filter = 2

* Applying /etc/sysctl.d/10-ptrace.conf ...

kernel.yama.ptrace_scope = 1

* Applying /etc/sysctl.d/10-zeropage.conf ...

vm.mmap_min_addr = 65536

* Applying /usr/lib/sysctl.d/50-default.conf ...

net.ipv4.conf.default.promote_secondaries = 1

sysctl: setting key "net.ipv4.conf.all.promote_secondaries": Invalid argument

net.ipv4.ping_group_range = 0 2147483647

net.core.default_qdisc = fq_codel

fs.protected_regular = 1

fs.protected_fifos = 1

* Applying /usr/lib/sysctl.d/50-pid-max.conf ...

kernel.pid_max = 4194304

* Applying /etc/sysctl.d/99-kubernetes-cri.conf ...

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

* Applying /etc/sysctl.d/99-sysctl.conf ...

* Applying /usr/lib/sysctl.d/protect-links.conf ...

fs.protected_fifos = 1

fs.protected_hardlinks = 1

fs.protected_regular = 2

fs.protected_symlinks = 1

* Applying /etc/sysctl.conf ...
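The `sysctl --system` output is long, so it is easy to miss whether the three keys actually took effect. A minimal sketch of a check, assuming a POSIX shell: `check_k8s_sysctls` is a hypothetical helper that reads the values directly from `/proc/sys` (the root directory is a parameter only so the check can be exercised against a fake tree). Since the `net.bridge.*` keys only appear once `br_netfilter` is loaded, a MISSING line also hints that the module is not loaded.

```shell
#!/bin/sh
# Hypothetical helper: verify the keys from /etc/sysctl.d/99-kubernetes-cri.conf.
# Usage: check_k8s_sysctls [PROC_SYS_ROOT]   (defaults to /proc/sys)
check_k8s_sysctls() {
  root="${1:-/proc/sys}"
  rc=0
  for key in net.bridge.bridge-nf-call-iptables net.ipv4.ip_forward net.bridge.bridge-nf-call-ip6tables; do
    # A sysctl key "a.b.c" is exposed as the file "$root/a/b/c".
    f="$root/$(printf '%s' "$key" | tr '.' '/')"
    if [ ! -r "$f" ]; then
      # net.bridge.* only exists after br_netfilter is loaded.
      echo "MISSING $key"
      rc=1
    elif [ "$(cat "$f")" != "1" ]; then
      echo "WRONG   $key = $(cat "$f")"
      rc=1
    else
      echo "OK      $key = 1"
    fi
  done
  return "$rc"
}

# Usage: check_k8s_sysctls && echo "sysctl prerequisites look fine"
```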

Proxy settings for apt

controlplane:~$ export http_proxy=http://<proxy-host>:<proxy-port>/

controlplane:~$ cat <<EOF | sudo tee /etc/apt/apt.conf.d/90proxy

> Acquire::http::Proxy "${http_proxy}";

> Acquire::https::Proxy "${http_proxy}";

> EOF

... snip ...

Install containerd:

controlplane:~$ sudo apt-get update && sudo apt-get install -y containerd

(snip)

controlplane:~$ sudo mkdir -p /etc/containerd

controlplane:~$ containerd config default | sudo tee /etc/containerd/config.toml

(snip)

Proxy settings for containerd

controlplane:~$ export no_proxy=10.0.0.0/8

controlplane:~$ sudo mkdir /etc/systemd/system/containerd.service.d

controlplane:~$ cat <<EOF | sudo tee /etc/systemd/system/containerd.service.d/http-proxy.conf

> [Service]

> Environment="HTTP_PROXY=${http_proxy}" "HTTPS_PROXY=${http_proxy}" "NO_PROXY=${no_proxy}"

> EOF

[Service]

Environment="HTTP_PROXY=http://<proxy-host>:<proxy-port>/" "HTTPS_PROXY=http://<proxy-host>:<proxy-port>/" "NO_PROXY=10.0.0.0/8"

controlplane:~$ sudo systemctl daemon-reload

controlplane:~$ sudo systemctl restart containerd.service

controlplane:~$ unset http_proxy

controlplane:~$ unset no_proxy
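To confirm that systemd actually passed the drop-in's variables to containerd, `systemctl show containerd --property=Environment` prints them on one line. The sketch below, assuming a POSIX shell, pipes that line into a hypothetical `check_proxy_env` helper that reports which of the three variables are present. It checks presence only, not whether the proxy is reachable.

```shell
#!/bin/sh
# Hypothetical helper: reads the output of
#   systemctl show <unit> --property=Environment
# on stdin and reports whether each proxy variable has a non-empty value.
check_proxy_env() {
  env_line=$(cat)
  rc=0
  for var in HTTP_PROXY HTTPS_PROXY NO_PROXY; do
    # The name must start a token, so e.g. FOO_NO_PROXY does not count as NO_PROXY.
    if printf '%s\n' "$env_line" | grep -Eq "(^Environment=| )${var}=[^ ]+"; then
      echo "OK      $var"
    else
      echo "MISSING $var"
      rc=1
    fi
  done
  return "$rc"
}

# Usage on the node:
#   systemctl show containerd --property=Environment | check_proxy_env
```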

It seems the recommended setup is to use systemd as the cgroup driver for both the CRI runtime and the kubelet.

https://kubernetes.io/docs/setup/production-environment/container-runtimes/#cgroup-drivers

However, I ran into a problem where kubeadm init failed when a cgroup driver was specified for the kubelet, so this time I am leaving containerd at its default (cgroupfs).
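Whichever driver is chosen, the Kubernetes documentation linked above stresses that the container runtime and the kubelet must use the same one. A minimal sketch of a consistency check, assuming a POSIX shell and the file locations from this log (`/etc/containerd/config.toml`, and `/var/lib/kubelet/config.yaml`, which kubeadm writes during init/join). The hypothetical `cgroup_drivers` helper treats containerd as using systemd only if the runc option `SystemdCgroup` or the older `systemd_cgroup` flag is explicitly `true`, and falls back to the kubelet default of `cgroupfs` when `cgroupDriver` is absent. It is a text grep rather than a TOML/YAML parser, so treat its answer as a hint.

```shell
#!/bin/sh
# Hypothetical helper: print the cgroup driver configured for containerd and
# for the kubelet, and return non-zero if they differ.
# Usage: cgroup_drivers CONTAINERD_TOML KUBELET_YAML
cgroup_drivers() {
  # containerd: systemd only if one of the flags is explicitly true
  # (commented-out lines start with '#' and do not match).
  if grep -Eq '^[[:space:]]*(SystemdCgroup|systemd_cgroup)[[:space:]]*=[[:space:]]*true' "$1"; then
    ctr=systemd
  else
    ctr=cgroupfs
  fi
  # kubelet: top-level cgroupDriver key; the kubelet default is cgroupfs.
  kub=$(awk '/^cgroupDriver:/ { print $2; exit }' "$2")
  kub=${kub:-cgroupfs}
  echo "containerd: $ctr"
  echo "kubelet:    $kub"
  [ "$ctr" = "$kub" ]
}

# Usage (reading the files may require sudo):
#   cgroup_drivers /etc/containerd/config.toml /var/lib/kubelet/config.yaml
```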

To check that images can be pulled, install crictl.

controlplane:~$ VERSION="v1.20.0"

controlplane:~$ wget https://github.com/kubernetes-sigs/cri-tools/releases/download/$VERSION/crictl-$VERSION-linux-amd64.tar.gz

(snip)

controlplane:~$ sudo tar zxvf crictl-$VERSION-linux-amd64.tar.gz -C /usr/local/bin

crictl

controlplane:~$ rm -f crictl-$VERSION-linux-amd64.tar.gz

Create the crictl configuration file

controlplane:~$ cat <<EOF | sudo tee /etc/crictl.yaml

> runtime-endpoint: unix:///run/containerd/containerd.sock

> image-endpoint: unix:///run/containerd/containerd.sock

> EOF

runtime-endpoint: unix:///run/containerd/containerd.sock

image-endpoint: unix:///run/containerd/containerd.sock

controlplane:~$ sudo crictl version

Version: 0.1.0

RuntimeName: containerd

RuntimeVersion: 1.3.3-0ubuntu2.2

RuntimeApiVersion: v1alpha2

Check that a container image can be pulled.

controlplane:~$ sudo crictl pull hello-world

Image is up to date for sha256:bf756fb1ae65adf866bd8c456593cd24beb6a0a061dedf42b26a993176745f6b

controlplane:~$ sudo crictl start hello-world

FATA[0000] starting the container "hello-world": rpc error: code = NotFound desc = an error occurred when try to find container "hello-world": not found

controlplane:~$ sudo crictl image ls

IMAGE TAG IMAGE ID SIZE

docker.io/library/hello-world latest bf756fb1ae65a 6.67kB

controlplane:~$ sudo crictl rmi bf756fb1ae65a

Deleted: docker.io/library/hello-world:latest

The image was pulled successfully, so the proxy settings appear to be working.
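The `crictl start hello-world` attempt in the log fails as expected: `crictl start` takes the ID of a container that already exists, not an image name. In the CRI model a container is first created inside a pod sandbox (`crictl runp`, then `crictl create`, then `crictl start`). The sketch below, assuming a POSIX shell, writes minimal config files for that flow, modeled on the examples in the crictl user guide listed in the references; the helper name and the sandbox name/uid are made up for illustration. Until a CNI plugin such as Calico is installed, `crictl runp` will likely fail while setting up the pod network, so this is better tried after the cluster network is up.

```shell
#!/bin/sh
# Hypothetical helper: write a pod sandbox config and a container config for
# the hello-world image into DIR, in the JSON format read by crictl.
write_crictl_configs() {
  mkdir -p "$1"
  cat > "$1/pod-config.json" <<'EOF'
{
  "metadata": {
    "name": "hello-sandbox",
    "namespace": "default",
    "attempt": 1,
    "uid": "hello-sandbox-uid-0001"
  },
  "log_directory": "/tmp",
  "linux": {}
}
EOF
  cat > "$1/container-config.json" <<'EOF'
{
  "metadata": { "name": "hello-world" },
  "image": { "image": "docker.io/library/hello-world:latest" },
  "log_path": "hello-world.log",
  "linux": {}
}
EOF
}

# Usage on the node (the image was removed with rmi above, so pull it again):
#   write_crictl_configs ./crictl-hello
#   sudo crictl pull hello-world
#   POD_ID=$(sudo crictl runp ./crictl-hello/pod-config.json)
#   CTR_ID=$(sudo crictl create "$POD_ID" ./crictl-hello/container-config.json ./crictl-hello/pod-config.json)
#   sudo crictl start "$CTR_ID"
#   sudo crictl logs "$CTR_ID"
#   sudo crictl stopp "$POD_ID" && sudo crictl rmp "$POD_ID"
```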

Installing Kubernetes

Follow the steps in the k8s documentation.

Disabling swap

controlplane:~$ free -m

total used free shared buff/cache available

Mem: 385401 1067 383079 2 1253 382452

Swap: 8191 0 8191

controlplane:~$ sudo swapoff /swap.img

controlplane:~$ free -m

total used free shared buff/cache available

Mem: 385401 1068 383077 2 1255 382451

Swap: 0 0 0

controlplane:~$ sudo vim /etc/fstab

controlplane:~$ cat /etc/fstab

# /etc/fstab: static file system information.

#

# Use 'blkid' to print the universally unique identifier for a

# device; this may be used with UUID= as a more robust way to name devices

# that works even if disks are added and removed. See fstab(5).

#

# <file system> <mount point> <type> <options> <dump> <pass>

# / was on /dev/sda2 during curtin installation

/dev/disk/by-uuid/1d472118-9c36-44a3-b159-46d4ae5c4ced / ext4 defaults 0 0

# /boot/efi was on /dev/sda1 during curtin installation

/dev/disk/by-uuid/E0B4-C69E /boot/efi vfat defaults 0 0

#/swap.img none swap sw 0 0
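Editing `/etc/fstab` by hand works, but the same change can be scripted when preparing several nodes. A minimal sketch, assuming a POSIX shell and awk: the hypothetical `comment_out_swap` helper prefixes `#` to every uncommented entry whose third field (the filesystem type) is `swap`, and leaves already-commented lines alone, so running it twice is harmless.

```shell
#!/bin/sh
# Hypothetical helper: comment out active swap entries in an fstab file.
# Usage: comment_out_swap FSTAB
comment_out_swap() {
  tmp="$1.tmp.$$"
  # Field 3 of an fstab entry is the filesystem type; skip comment lines.
  awk '!/^[ \t]*#/ && $3 == "swap" { print "#" $0; next } { print }' "$1" > "$tmp" &&
    cat "$tmp" > "$1" &&   # rewrite in place to keep the file's owner and mode
    rm -f "$tmp"
}

# Usage as root:
#   swapoff -a && comment_out_swap /etc/fstab
```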

Make sure iptables does not use the nftables backend.

controlplane:~$ sudo apt-get install -y iptables arptables ebtables

(snip)

controlplane:~$ sudo update-alternatives --set iptables /usr/sbin/iptables-legacy

controlplane:~$ sudo update-alternatives --set ip6tables /usr/sbin/ip6tables-legacy

controlplane:~$ sudo update-alternatives --set arptables /usr/sbin/arptables-legacy

update-alternatives: using /usr/sbin/arptables-legacy to provide /usr/sbin/arptables (arptables) in manual mode

controlplane:~$ sudo update-alternatives --set ebtables /usr/sbin/ebtables-legacy

update-alternatives: using /usr/sbin/ebtables-legacy to provide /usr/sbin/ebtables (ebtables) in manual mode
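With iptables 1.8 and later, `iptables --version` reports the active backend in parentheses, for example `(legacy)` or `(nf_tables)`, which is a quick way to confirm that the switch above took effect. A small sketch assuming a POSIX shell; `iptables_backend` is a hypothetical helper that extracts that word. The arptables and ebtables alternatives are not covered, since their version output may differ.

```shell
#!/bin/sh
# Hypothetical helper: read `iptables --version` output on stdin and print the
# backend named in parentheses ("legacy" or "nf_tables"). Prints nothing for
# older versions that do not report a backend.
iptables_backend() {
  sed -n 's/.*(\([a-z_]*\)).*/\1/p'
}

# Usage:
#   for t in iptables ip6tables; do
#     printf '%s: ' "$t"; sudo "$t" --version | iptables_backend
#   done
```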

Installing kubeadm, kubelet and kubectl

controlplane:~$ sudo apt-get update && sudo apt-get install -y apt-transport-https curl

(snip)

controlplane:~$ env https_proxy=http://<proxy-host>:<proxy-port>/ curl -s https://packages.cloud.google.com/apt/doc/apt-key.gpg | sudo apt-key add -

OK

controlplane:~$ cat <<EOF | sudo tee /etc/apt/sources.list.d/kubernetes.list

> deb https://apt.kubernetes.io/ kubernetes-xenial main

> EOF

deb https://apt.kubernetes.io/ kubernetes-xenial main

controlplane:~$ sudo apt-get update

(snip)

controlplane:~$ sudo apt-get install -y kubelet kubeadm kubectl

(snip)

controlplane:~$ sudo apt-mark hold kubelet kubeadm kubectl

kubelet set on hold.

kubeadm set on hold.

kubectl set on hold.
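The `apt-get install` above installs whatever version is newest in the repository (v1.20.4 at the time of this log). To get the same versions on nodes set up later, the packages can be pinned explicitly. A sketch assuming a POSIX shell; `k8s_pkg_specs` is a hypothetical helper, and its default package revision `00` is an assumption based on the version strings the apt.kubernetes.io repository used at the time, so confirm it with `apt-cache madison kubeadm` first.

```shell
#!/bin/sh
# Hypothetical helper: print apt package specs pinning kubelet, kubeadm and
# kubectl to the same version.
# Usage: k8s_pkg_specs VERSION [REVISION]   (REVISION defaults to 00, an assumption)
k8s_pkg_specs() {
  rev="${2:-00}"
  echo "kubelet=$1-$rev kubeadm=$1-$rev kubectl=$1-$rev"
}

# Usage (word splitting of the unquoted substitution is intended):
#   sudo apt-get install -y $(k8s_pkg_specs 1.20.4)
#   sudo apt-mark hold kubelet kubeadm kubectl
```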

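If the node has multiple IP addresses, specify which one the kubelet should use in its environment file (/etc/default/kubelet) beforehand.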

controlplane:~$ cat <<EOF | sudo tee /etc/default/kubelet

> KUBELET_EXTRA_ARGS=--node-ip=172.16.90.1

> EOF

KUBELET_EXTRA_ARGS=--node-ip=172.16.90.1
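The value has to be each node's own address; for example, according to the node list at the end of this log, worker01 would use 172.16.90.2. In the kubeadm packages of this era, the kubelet's `10-kubeadm.conf` systemd drop-in loads this file via `EnvironmentFile=-/etc/default/kubelet`. A small sketch, assuming a POSIX shell: the hypothetical `kubelet_default_for` helper renders the line after a basic IPv4 format check (it does not reject octets above 255).

```shell
#!/bin/sh
# Hypothetical helper: print the /etc/default/kubelet line for one node.
# Usage: kubelet_default_for IPV4_ADDRESS
kubelet_default_for() {
  # Shape check only: four dot-separated groups of 1-3 digits.
  if ! printf '%s\n' "$1" | grep -Eq '^([0-9]{1,3}\.){3}[0-9]{1,3}$'; then
    echo "not an IPv4 address: $1" >&2
    return 1
  fi
  echo "KUBELET_EXTRA_ARGS=--node-ip=$1"
}

# Usage on worker01:
#   kubelet_default_for 172.16.90.2 | sudo tee /etc/default/kubelet
#   sudo systemctl restart kubelet   # only needed if the kubelet is already running
```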

Initializing your control-plane node

controlplane:~$ sudo kubeadm init \

> --cri-socket=/run/containerd/containerd.sock \

> --apiserver-advertise-address=172.16.90.1 \

> --pod-network-cidr=192.168.0.0/16 \

> --dry-run

(snip)

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/tmp/kubeadm-init-dryrun952978795/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.16.90.1:6443 --token rk8u51.4wlm72el3vobkigm \

--discovery-token-ca-cert-hash sha256:9a8c3f956fd8431ec637f04557479dcf3f28d4c49c359b17211f9617f2815490

controlplane:~$ sudo kubeadm init \

> --cri-socket=/run/containerd/containerd.sock \

> --control-plane-endpoint 172.16.90.1 \

> --apiserver-advertise-address=172.16.90.1 \

> --apiserver-cert-extra-sans=133.162.11.171 \

> --pod-network-cidr=192.168.0.0/16

(snip)

Your Kubernetes control-plane has initialized successfully!

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 172.16.90.1:6443 --token n2nf5j.c6l9ehlwv4vcifn9 \

--discovery-token-ca-cert-hash sha256:a27b01a8428d526f5d727f13952f591003d994af33f1826006558d24f2da147c
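The same settings can also be kept in a kubeadm configuration file, which is easier to review and reuse than a long command line. A minimal sketch, assuming the `kubeadm.k8s.io/v1beta2` config API used by kubeadm v1.20 and a POSIX shell; `write_kubeadm_config` and the output filename are hypothetical, and the values are the ones from the `kubeadm init` command above. kubeadm generally refuses to mix `--config` with flags such as `--pod-network-cidr`, so everything goes into the file.

```shell
#!/bin/sh
# Hypothetical helper: write a kubeadm v1beta2 config equivalent to the
# kubeadm init flags used in this log. Usage: write_kubeadm_config FILE
write_kubeadm_config() {
  cat > "$1" <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.16.90.1              # --apiserver-advertise-address
nodeRegistration:
  criSocket: /run/containerd/containerd.sock # --cri-socket
  kubeletExtraArgs:
    node-ip: 172.16.90.1                     # alternative to /etc/default/kubelet; keep them consistent
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
controlPlaneEndpoint: 172.16.90.1            # --control-plane-endpoint
apiServer:
  certSANs:
  - 133.162.11.171                           # --apiserver-cert-extra-sans
networking:
  podSubnet: 192.168.0.0/16                  # --pod-network-cidr
EOF
}

# Usage:
#   write_kubeadm_config ./kubeadm-config.yaml
#   sudo kubeadm init --config ./kubeadm-config.yaml --dry-run   # check first
#   sudo kubeadm init --config ./kubeadm-config.yaml
```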

Checking the node

controlplane:~$ mkdir -p $HOME/.kube

controlplane:~$ sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

controlplane:~$ sudo chown $(id -u):$(id -g) $HOME/.kube/config

controlplane:~$ unset KUBECONFIG

controlplane:~$ kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

controlplane NotReady control-plane,master 47s v1.20.4 172.16.90.1 <none> Ubuntu 20.04.2 LTS 5.4.0-65-generic containerd://1.3.3-0ubuntu2.2

This confirms that INTERNAL-IP is set to the IP address we specified.
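When checking several nodes, comparing INTERNAL-IP by eye gets tedious. A sketch assuming a POSIX shell and awk; `check_node_ip` is a hypothetical helper that parses the `-o wide` table and assumes INTERNAL-IP is the sixth whitespace-separated column, as in the output above.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get node -o wide` output on stdin and
# check that NODE's INTERNAL-IP (column 6 in this layout) equals EXPECTED_IP.
# Usage: check_node_ip NODE EXPECTED_IP
check_node_ip() {
  actual=$(awk -v n="$1" 'NR > 1 && $1 == n { print $6 }')
  echo "$1: INTERNAL-IP=${actual:-<not found>}"
  [ "$actual" = "$2" ]
}

# Usage:
#   kubectl get node -o wide | check_node_ip controlplane 172.16.90.1
```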

Installing Calico

controlplane:~$ kubectl apply -f https://docs.projectcalico.org/manifests/tigera-operator.yaml

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/imagesets.operator.tigera.io created

customresourcedefinition.apiextensions.k8s.io/installations.operator.tigera.io created

customresourcedefinition.apiextensions.k8s.io/tigerastatuses.operator.tigera.io created

namespace/tigera-operator created

podsecuritypolicy.policy/tigera-operator created

serviceaccount/tigera-operator created

clusterrole.rbac.authorization.k8s.io/tigera-operator created

clusterrolebinding.rbac.authorization.k8s.io/tigera-operator created

deployment.apps/tigera-operator created

controlplane:~$ kubectl apply -f https://docs.projectcalico.org/manifests/custom-resources.yaml

installation.operator.tigera.io/default created

controlplane:~$ kubectl get pod -A

NAMESPACE NAME READY STATUS RESTARTS AGE

calico-system calico-kube-controllers-6797d7cc89-kr645 1/1 Running 0 34s

calico-system calico-node-kvqbm 1/1 Running 0 34s

calico-system calico-typha-57c94df999-glcs8 1/1 Running 0 34s

kube-system coredns-74ff55c5b-fzp4f 1/1 Running 0 16m

kube-system coredns-74ff55c5b-t2rq9 1/1 Running 0 16m

kube-system etcd-controlplane 1/1 Running 0 16m

kube-system kube-apiserver-controlplane 1/1 Running 0 16m

kube-system kube-controller-manager-controlplane 1/1 Running 0 16m

kube-system kube-proxy-qtz96 1/1 Running 0 16m

kube-system kube-scheduler-controlplane 1/1 Running 0 16m

tigera-operator tigera-operator-549bf46b5c-jrzc5 1/1 Running 0 43s

controlplane:~$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

controlplane Ready control-plane,master 16m v1.20.4

At some point, Calico switched to an operator-based installation.
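This worked without edits because the IP pool in Calico's default `custom-resources.yaml` is 192.168.0.0/16, the same range passed to `--pod-network-cidr`. With a different pod CIDR, the Calico quickstart advises downloading the manifest and adjusting the pool before applying it. A sketch assuming a POSIX shell and a `sed` that supports `-E`; `set_calico_cidr` is a hypothetical helper that rewrites every `cidr:` value in the file, so check the result if the manifest defines more than one pool.

```shell
#!/bin/sh
# Hypothetical helper: replace the value of every "cidr:" key in FILE with CIDR.
# Usage: set_calico_cidr FILE CIDR
set_calico_cidr() {
  tmp="$1.tmp.$$"
  # '#' is the sed delimiter because CIDRs contain '/'; \1 keeps the indentation.
  sed -E "s#^([[:space:]]*-?[[:space:]]*cidr:[[:space:]]*).*#\\1$2#" "$1" > "$tmp" &&
    mv "$tmp" "$1"
}

# Usage (10.244.0.0/16 is only an example CIDR):
#   env https_proxy=http://<proxy-host>:<proxy-port>/ curl -sSLO https://docs.projectcalico.org/manifests/custom-resources.yaml
#   set_calico_cidr custom-resources.yaml 10.244.0.0/16
#   kubectl apply -f custom-resources.yaml
```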

With that, the control plane installation is complete for now!

Installing the worker nodes

On each worker node, run the same steps as for the control plane, up to (but not including) kubeadm init.

Then add each worker node to the k8s cluster with the kubeadm join command.

worker01:~$ sudo kubeadm join 172.16.90.1:6443 --token n2nf5j.c6l9ehlwv4vcifn9 \

> --discovery-token-ca-cert-hash sha256:a27b01a8428d526f5d727f13952f591003d994af33f1826006558d24f2da147c

[preflight] Running pre-flight checks

[preflight] Reading configuration from the cluster...

[preflight] FYI: You can look at this config file with 'kubectl -n kube-system get cm kubeadm-config -o yaml'

[kubelet-start] Writing kubelet configuration to file "/var/lib/kubelet/config.yaml"

[kubelet-start] Writing kubelet environment file with flags to file "/var/lib/kubelet/kubeadm-flags.env"

[kubelet-start] Starting the kubelet

[kubelet-start] Waiting for the kubelet to perform the TLS Bootstrap...

This node has joined the cluster:

* Certificate signing request was sent to apiserver and a response was received.

* The Kubelet was informed of the new secure connection details.

Run 'kubectl get nodes' on the control-plane to see this node join the cluster.
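The join command printed by `kubeadm init` contains a bootstrap token that expires (after 24 hours by default), so workers added later need a fresh one, which `kubeadm token create --print-join-command` prints on the control plane. Because the command is usually copied between terminals, a quick format check can catch truncation. A sketch assuming a POSIX shell; `validate_join_cmd` is a hypothetical helper that only checks the shape of the token (6 and 16 lowercase alphanumerics separated by a dot) and of the `sha256:` CA hash, not whether they are valid for the cluster.

```shell
#!/bin/sh
# Hypothetical helper: check that a kubeadm join command contains a
# well-formed bootstrap token and CA cert hash. Format check only.
# Usage: validate_join_cmd "JOIN_COMMAND"
validate_join_cmd() {
  printf '%s\n' "$1" |
    grep -Eq -- '--token[[:space:]]+[a-z0-9]{6}\.[a-z0-9]{16}([[:space:]]|$)' &&
  printf '%s\n' "$1" |
    grep -Eq -- '--discovery-token-ca-cert-hash[[:space:]]+sha256:[a-f0-9]{64}([[:space:]]|$)'
}

# Usage on the control plane:
#   JOIN_CMD=$(sudo kubeadm token create --print-join-command)
#   validate_join_cmd "$JOIN_CMD" && echo "$JOIN_CMD"
```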

Checking the node list

controlplane:~$ kubectl get node -o wide

NAME STATUS ROLES AGE VERSION INTERNAL-IP EXTERNAL-IP OS-IMAGE KERNEL-VERSION CONTAINER-RUNTIME

controlplane Ready control-plane,master 48m v1.20.4 172.16.90.1 <none> Ubuntu 20.04.2 LTS 5.4.0-65-generic containerd://1.3.3-0ubuntu2.2

worker01 Ready <none> 29m v1.20.4 172.16.90.2 <none> Ubuntu 20.04.2 LTS 5.4.0-65-generic containerd://1.3.3-0ubuntu2.2

worker02 Ready <none> 4m58s v1.20.4 172.16.90.3 <none> Ubuntu 20.04.1 LTS 5.4.0-42-generic containerd://1.3.3-0ubuntu2.2

The worker nodes have been added, so the k8s cluster is now complete!

References

- https://kubernetes.io/docs/setup/production-environment/container-runtimes/#containerd

- https://github.com/kubernetes-sigs/cri-tools/blob/master/docs/crictl.md#install-crictl

- https://kubernetes.io/docs/setup/production-environment/tools/kubeadm/install-kubeadm/

- https://github.com/kubernetes/kubeadm/issues/203#issuecomment-412248894

- https://docs.projectcalico.org/getting-started/kubernetes/quickstart
