I’m skeptical. In particular, about this idea that the rate of global warming at Earth’s surface has recently exhibited a slowdown.

Even more extreme claims have been promoted, such as an actual “pause” or “hiatus” in warming of Earth’s surface (i.e. that surface warming actually came to a halt). But those ideas are fading away, not just because we’re setting new temperature records, but also because it has finally dawned on people that actual statistical evidence for a pause just isn’t there. Never was. The longer and louder deniers continue the “pause” narrative, the more foolish they show themselves to be.

But to some people (not everybody), some of the graphs (not all of them) made it look so much like something different happened recently, that the idea of a slowdown took hold. Naturally climate scientists want to understand it — so they have searched for reasons and causes and explanations. But skepticism about the idea seems lacking. My guess is that a majority of climate scientists consider it established (it’s certainly mentioned in the IPCC reports).

On another forum I was recently part of a very brief discussion about the subject (one which mainly amounted to expressing our opinions). At least one very distinguished climate scientist stated that he considered a slowdown to be a genuine feature of the modern surface temperature record, while I stated that I didn’t see enough evidence to draw that conclusion.

This is exactly one of those subjects about which climate scientists can, and do, disagree. Not that I count myself among that august body, but in the spirit of the most convivial disagreement, with some whom I greatly respect and even like (without ever having met in person), I’d like to lay out the evidence for my claim that when it comes to claiming a slowdown, there isn’t enough evidence yet. If others can provide a cogent contradiction, then we’ll all have learned something.

So let’s get down to brass tacks.

A recent analysis by Stefan Rahmstorf with assistance from Niamh Cahill used a form of change-point analysis to look for exactly what we’re discussing: changes in the rate of surface warming. It does so by fitting piecewise linear functions to the data, and determining how many changes from one piece to another are needed, and statistically justified, to get an “optimal” model. It demonstrated that there were no statistically significant changes in the warming rate after 1970. At least, not according to that test.

Of course that doesn’t prove there hadn’t been some change, a “slowdown” even — but there just wasn’t enough evidence to claim there had been either. One conclusion we can rely on is that the real question is this: has the warming rate changed since 1970? Let’s begin by isolating the data since then, using the global temperature data from NASA GISS:

I’ve superimposed a line showing the linear trend (by least squares), which of course has a constant warming rate. One of the difficulties we’ll face in trying to find changes of the warming rate is that the data show such strong autocorrelation. We can reduce its impact, and simplify things considerably, if we use the monthly data as shown to compute annual averages, and work with those.

Annual averages will still show autocorrelation, but it’ll be much weaker and we’ll simply ignore it. Incidentally, that will make it easier to show a change in the warming rate, so this errs on the side of confirming the trend has changed rather than stayed the same.
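For anyone who wants to see the effect of annual averaging on autocorrelation, here is a minimal sketch. It does not use the GISS data: the monthly series is synthetic (a straight-line trend plus AR(1) noise), and the trend size, noise level, and AR coefficient are assumptions chosen only for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in for monthly anomalies, 1970-2013 (NOT the real GISS data):
# an assumed linear trend of 0.017 deg C/yr plus AR(1) noise to mimic autocorrelation.
n_years = 44
n_months = n_years * 12
t_month = 1970 + (np.arange(n_months) + 0.5) / 12
noise = np.zeros(n_months)
for i in range(1, n_months):
    noise[i] = 0.6 * noise[i - 1] + rng.normal(0, 0.08)
monthly = 0.017 * (t_month - 1970) + noise

# Annual means: reshape to (years, 12) and average across each row.
annual = monthly.reshape(n_years, 12).mean(axis=1)
years = np.arange(1970, 1970 + n_years)

def lag1_autocorr(x):
    """Lag-1 autocorrelation of x after removing a least-squares line."""
    idx = np.arange(len(x))
    resid = x - np.polyval(np.polyfit(idx, x, 1), idx)
    return np.corrcoef(resid[:-1], resid[1:])[0, 1]

print("monthly lag-1 autocorrelation:", round(lag1_autocorr(monthly), 2))
print("annual  lag-1 autocorrelation:", round(lag1_autocorr(annual), 2))
```

With real data you would replace `monthly` with the downloaded anomalies; everything after that line stays the same.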

Here are yearly averages of the GISS data:

The two most recent (and hottest) data points are circled in red. We’ll omit the final one because it’s for 2015, which is only three months old (but off to a toasty start). We’ll also omit 2014 because, on another forum, it was suggested that we should look for a slowdown without using 2014 data. I consider that a capital mistake — but I’m gonna do it anyway. This too will therefore err on the side of confirming the trend has changed rather than stayed the same. Since I’m genuinely looking for evidence of a slowdown, I’m just going to give it every possible chance, even if that means leaving out the most recent data, consisting of the hottest year on record followed by the hottest three-month start to a year on record.

That leaves us with this (again, a linear trend superimposed):

If you want to claim a change in the trend (meaning, a change in the warming rate), then you should be able to show it in this data set.

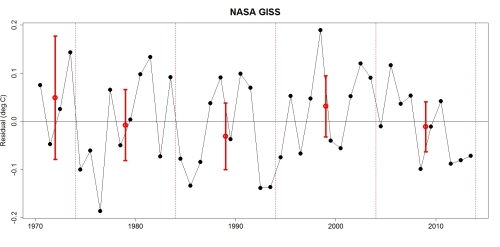

In fact, if you want to claim a change in the trend, then you need to show that there’s something significant other than just that linear trend. Here’s what’s left over after we subtract the linear trend from the data, leaving what are usually called “residuals”:

To show a change in the warming rate since 1970, you need to show there’s something in these residuals other than just noise. If you can’t do that, then I say you’ve failed to provide solid evidence of a slowdown.
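Computing the residuals is a one-liner once the least-squares line is fitted. The sketch below again uses synthetic annual values (an assumed constant warming rate plus white noise), not the GISS numbers.

```python
import numpy as np

rng = np.random.default_rng(1)
years = np.arange(1970, 2014)
# Synthetic annual anomalies (NOT real data): constant warming rate plus white noise.
temps = 0.017 * (years - 1970) + rng.normal(0, 0.09, years.size)

# Centre the time axis before fitting; this keeps the fit well conditioned.
x = years - years.mean()
slope, intercept = np.polyfit(x, temps, 1)   # degree 1 returns [slope, intercept]
residuals = temps - (slope * x + intercept)

print(f"fitted warming rate: {slope:.4f} deg C/yr")
print(f"residual mean: {residuals.mean():.2e}")
```

By construction the residuals have zero mean and zero linear trend, so anything a later test finds in them has to be something other than the straight line.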

I originally started trying (long ago in a galaxy far away) by fitting continuous piecewise linear functions, essentially reproducing the change point analysis of Rahmstorf and Cahill. In doing so we try all reasonable change points (did the trend change in 1998 or 2006? or 2002? or last Thursday?) and we need to take that into account. After all, if you’re aiming at a target, it’s not so impressive to hit the bulls-eye when you’re allowed a dozen tries! Traditional change-point analysis adjusts how we compute the p-value for each statistical test to compensate for our making multiple trials, often doing so by Monte Carlo simulations. I used a different (but equivalent) method, of computing p-values the single-trial way but using Monte Carlo to determine how those should be adjusted. After each change-point try, if it gave a seemingly significant result, meaning a naive p-value of 0.05 or less (the de facto standard for statistical significance), I still needed to adjust that for multiple trials. As a result, most of the apparently significant change points will turn out to be simply not so.

The real kicker is that when I got to that final stage of adjusting for multiple trials, I didn’t have to, because there weren’t any seemingly significant results to adjust. We got our dozen shots at the target and still couldn’t hit the bulls-eye. So the change-point analysis not only fails to demonstrate any trend in these residuals, it doesn’t even come close.
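The multiple-trials idea can be sketched as follows. This is not the code behind the results above, and it swaps in a slightly different (though closely related) bookkeeping: instead of adjusting naive p-values, it compares the largest F statistic over all candidate change points with the Monte Carlo distribution of that same maximum under pure noise. The data are synthetic, and the function name `max_hinge_F` is made up for this example.

```python
import numpy as np

def max_hinge_F(y, t, candidates):
    """Largest F statistic, over candidate change points tc, for adding a
    hinge term max(t - tc, 0) to a straight-line fit (continuous piecewise linear)."""
    n = len(y)
    X0 = np.column_stack([np.ones(n), t])
    rss0 = np.sum((y - X0 @ np.linalg.lstsq(X0, y, rcond=None)[0]) ** 2)
    best = 0.0
    for tc in candidates:
        X1 = np.column_stack([X0, np.maximum(t - tc, 0.0)])
        rss1 = np.sum((y - X1 @ np.linalg.lstsq(X1, y, rcond=None)[0]) ** 2)
        F = (rss0 - rss1) / (rss1 / (n - 3))   # one extra parameter, n - 3 residual dof
        best = max(best, F)
    return best

rng = np.random.default_rng(2)
t = np.arange(1970, 2014, dtype=float) - 1970
y = 0.017 * t + rng.normal(0, 0.09, t.size)    # synthetic series with no real change point
candidates = t[5:-5]                            # keep at least 5 years on each side

F_obs = max_hinge_F(y, t, candidates)

# Monte Carlo null: white noise put through the identical "try every change point"
# procedure. The F statistic ignores the noise scale and any linear trend.
n_sim = 500
F_null = np.array([max_hinge_F(rng.normal(0, 1, t.size), t, candidates)
                   for _ in range(n_sim)])
p_adjusted = (1 + np.sum(F_null >= F_obs)) / (1 + n_sim)

print(f"max F = {F_obs:.2f}, p adjusted for multiple trials = {p_adjusted:.3f}")
```

The white-noise null matches the choice made above to ignore the weak autocorrelation in annual averages; a stricter test would simulate autocorrelated noise instead.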

Maybe piecewise linear functions turn corners too sharply, while the changing trend has been more curved. Let’s try polynomials. That of 2nd degree (quadratic) fails statistical significance. So does a 3rd-degree (cubic) polynomial, and a 4th and 5th, and all the way up to degree 10 it still fails. No demonstrable polynomial trend in these residuals.

By the way, we tried lots of polynomial degrees so we should adjust those too for multiple trials. It’s part of the complication involved in stepwise regression. But once again we don’t have to adjust the seemingly significant results, because there aren’t any. Not only is there no demonstrable polynomial trend, it’s not even close.
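The polynomial check amounts to a sequence of nested-model F tests, each comparing a degree-d polynomial with the straight line. A minimal sketch on synthetic data follows (assumed trend and noise level, not the GISS values); the p-values it prints are naive single-trial ones, with no adjustment for having tried nine degrees.

```python
import numpy as np
from numpy.polynomial import Polynomial
from scipy import stats

rng = np.random.default_rng(3)
years = np.arange(1970, 2014)
temps = 0.017 * (years - 1970) + rng.normal(0, 0.09, years.size)  # synthetic

def rss_poly(x, y, degree):
    """Residual sum of squares for a least-squares polynomial of the given degree."""
    fit = Polynomial.fit(x, y, degree)   # rescales x internally for numerical stability
    return np.sum((y - fit(x)) ** 2)

n = years.size
rss_line = rss_poly(years, temps, 1)
p_values = {}
for degree in range(2, 11):
    rss_d = rss_poly(years, temps, degree)
    extra = degree - 1                   # terms added beyond the straight line
    dof = n - (degree + 1)               # residual degrees of freedom of the bigger model
    F = ((rss_line - rss_d) / extra) / (rss_d / dof)
    p_values[degree] = stats.f.sf(F, extra, dof)
    print(f"degree {degree:2d}: F = {F:5.2f}, naive p = {p_values[degree]:.3f}")
```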

Maybe it’s some different kind of trend change altogether. Maybe the last decade, the residuals have just been cooler than they tended to be in prior decades. Or maybe it was hotter. Or maybe the decade before this most recent one, is the decade that “stands out” and shows something significant, something different from the others. Can we look for that kind of change?

There is a way to look for differences between groups, a standard and strong way called ANOVA (ANalysis Of VAriance). Let’s split the residuals into 10-year segments, the most recent of which will culminate with 2013, then run the ANOVA analysis to see whether or not we can detect a difference among any of the segments (the first segment, as it turns out, will only have four years rather than 10 like the others, but that doesn’t bother ANOVA):

Result: no. With a p-value of 0.45 it falls into the “not even close” category; there’s no evidence from that analysis that any of these 10-year stretches of residuals is different from any of the others. Well, maybe 5-year spans show this? Again applying ANOVA, again it’s not even close, with a p-value of 0.332. Well, it kinda looks like the final six years (2008 through 2013) are on the low side, what does ANOVA say? It says “no.” Does my eye deceive me, or does the final 3-year span (2011 through 2013) look different? What if we split it into 3-year segments? Again, not significant, not even close.
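Here is a minimal sketch of that grouping-plus-ANOVA step using `scipy.stats.f_oneway`. The residuals are synthetic white noise, so the p-values it prints have nothing to do with the ones quoted above, and the helper `anova_by_span` is a hypothetical name. It also applies plain one-way ANOVA without correcting the degrees of freedom for the linear trend already removed, which is a simplification.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(4)
years = np.arange(1970, 2014)
residuals = rng.normal(0, 0.09, years.size)   # synthetic, noise-only residuals

def anova_by_span(years, residuals, span, last_year=2013):
    """One-way ANOVA across consecutive `span`-year groups, aligned so the
    most recent group ends at `last_year`; the earliest group may be shorter."""
    labels = (last_year - years) // span       # 0 = most recent group
    groups = [residuals[labels == k] for k in np.unique(labels)]
    return stats.f_oneway(*groups), [len(g) for g in groups]

for span in (10, 5, 3):
    result, sizes = anova_by_span(years, residuals, span)
    print(f"{span:2d}-year groups {sizes}: F = {result.statistic:.2f}, p = {result.pvalue:.3f}")
```

For the 10-year case the group sizes come out as four full decades plus a four-year remainder at the start, matching the split described above.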

What if we just took those last three years, when the residuals are all nearly the same and below zero, and compared them to the rest of the years 1970-2010 using a plain old t test instead of ANOVA? We have to recognize the null hypothesis is that the last three years aren’t really different from the preceding except for random fluctuation, so our t-test should use the null hypothesis of equal mean and equal variance, which calls for the equal-variance version. It says, once again, there’s no statistically significant evidence that the residuals during the 3-year span from 2011 through 2013 were any different from those which preceded it.
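The equal-variance (Student's) version of the two-sample test is what `scipy.stats.ttest_ind` does when `equal_var=True`. A minimal sketch, once more on synthetic noise-only residuals rather than the real ones:

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(5)
years = np.arange(1970, 2014)
residuals = rng.normal(0, 0.09, years.size)   # synthetic, noise-only residuals

last3 = residuals[years >= 2011]              # 2011 through 2013
earlier = residuals[years <= 2010]            # 1970 through 2010

# Null hypothesis: same mean and same variance, hence the pooled-variance test.
result = stats.ttest_ind(last3, earlier, equal_var=True)
print(f"t = {result.statistic:.2f}, p = {result.pvalue:.3f}")
```

Note that this p-value takes the choice of "last three years" as given in advance. Picking the span after looking at the graph is itself a form of multiple trials, so a seemingly significant result here would still need adjusting.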

What if … and I think this sounds crazy … the trend has changed in a discontinuous way? What if, instead of just changing its rate (the warming rate), it also changed its value, making a sudden jump? After all, if we’re to believe what the deniers say about the trend “since year whatever” without taking into account what came before that, then we’d have to believe in a “trend” in the residuals that looked something like this:

Frankly, I think such a trend would be unphysical. But crazier things have happened, so let’s find out if this kind of model can give us solid evidence of a trend change.

So, I fit models which allowed for a sudden change in both rate and value, at some change point. I tried all possible change point times which gave at least 5 years of data on both sides. I computed the p-value for each trial, and if any of them were seemingly significant I was prepared to adjust for multiple trials. I applied the proper F test, including accounting for the fact that I had already subtracted a linear trend. The residual fit shown above gave the strongest result, and corresponds to modeling the data (not residuals) like this:

When the results are adjusted for having made so many multiple trials, this really amounts to a form of the Chow test. But, once again, statistics says this strongest model isn’t even seemingly significant so we don’t even need to compute that refinement.
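The discontinuous model can be sketched as a scan over split points, at each one comparing a single line (2 parameters) with two independent lines (4 parameters). This is the Chow-type F statistic for a fixed break; the data below are synthetic, the function name `chow_F` is invented for the example, and the printed p-value is the naive one, before any adjustment for having tried every split.

```python
import numpy as np
from scipy import stats

def chow_F(t, y, k):
    """F statistic for a break at index k: one straight line versus two
    independent lines fitted to y[:k] and y[k:] (jump and slope change allowed)."""
    def rss(tt, yy):
        coef = np.polyfit(tt, yy, 1)
        return np.sum((yy - np.polyval(coef, tt)) ** 2)
    n = len(y)
    rss_one = rss(t, y)
    rss_two = rss(t[:k], y[:k]) + rss(t[k:], y[k:])
    return ((rss_one - rss_two) / 2) / (rss_two / (n - 4))

rng = np.random.default_rng(6)
t = np.arange(44.0)                              # years since 1970
y = 0.017 * t + rng.normal(0, 0.09, t.size)      # synthetic, no real break

n = len(t)
splits = range(5, n - 4)                         # at least 5 years on each side
F_by_split = {k: chow_F(t, y, k) for k in splits}
best_k = max(F_by_split, key=F_by_split.get)
p_naive = stats.f.sf(F_by_split[best_k], 2, n - 4)

print(f"strongest break: segment starting {1970 + int(t[best_k])}, "
      f"F = {F_by_split[best_k]:.2f}, naive p = {p_naive:.3f}")
```

Fitting to the raw series instead of the residuals sidesteps the bookkeeping for the already-subtracted trend, since the single line is refitted as the null model.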

One last note: just in case you think there might be a slowdown if I did include data from the year 2014, you’re mistaken. I’ve run all the same tests on that too, and still no trend change can be demonstrated.

Bottom line:

Even allowing for a rather crazy-seeming trend with discontinuity, no slowdown can be confirmed. Even cherry-picking (actually) the final 3-year span for a t-test can’t do it (I only picked a 3-year span because it looked like it might give me the result I was looking for). I only tried a t-test because the ANOVA test gave no significant result. I tried lots of other grouping intervals for ANOVA besides just 3 years and 5 years and 6 years and 10 years, without even bothering to account for multiple trials. I did the ANOVA because I couldn’t find any significant polynomial pattern, although I pushed the polynomial degree all the way up to 10. I tried polynomials because the original change point analysis found no trend change after 1970. And I did all of that after first chopping off the most recent data, consisting of the hottest year on record followed by the hottest three-month start to a year on record. I also did all of that without chopping off the most recent year’s data.

I think I gave it a fair shot. More than a reasonable chance. I still found no reliable evidence of a slowdown.

Maybe there has been one. Really. Maybe not. Really. If you think the slowdown is real and you want to study why it happened, that’s a great idea because we’re likely to learn more. I suspect we may learn more about the fluctuations than we do about the trend — but either way we learn.

Still, don’t say there is a slowdown as though it were a known fact; when you publish your hypothesis in Geophysical Research Letters, refer to the slowdown as purported or possible. ‘Cause I’ve tried to show it a dozen ways from Sunday, but it’s just not there, and I have yet to see anybody else show it either.

Now you are just showing off!!! Good work Tamino and thanks for the analysis.

I, too, was surprised when respectable scientists started looking for pause mechanisms. It appears to me that the last El Nino is spooking them; we should only judge recent years after the next one has appeared.

One thing does puzzle me – has anyone explained the pause in temperature rise that began around 1940? I would have thought the wars would have emitted more CO2, rather than less.

Humans are very wired to ‘see’ patterns. That is why statistics often provides better data. That is also a reason why statistics, even when competently and validly performed, arouse such negative emotions.

Even ‘respectable scientists’ are human!

I don’t have the reference on hand, but I have read in govt sources that part of the 1940s data may be contaminated by a change in the way sea water temps were recorded on ships. I would certainly wonder about coverage on both land and sea as well, though I have not seen that addressed, personally.

Of course any correcting done now even if actually correct would simply amount to further ‘tampering’ in the service of the worldwide conspiracy to hoax the public into maintaining the lifestyles of climate scientists.

I, too, was surprised when respectable scientists started looking for pause mechanisms.

Then you are making the same thinking mistake as Judith Curry.

Just because the trend line did not change does not mean that there are not reasons for deviations from this trend line.

Just because the relationship between temperature and CO2 did not change does not mean that there are no further relationships.

How can the pause be both ‘false’ and caused by something?

Notwithstanding any change in CO2 emissions, annual average atmospheric CO2 appears to have declined very slightly during the war years, from 310.3ppmv in 1939 to 310.1ppmv in 1945 (data from, I think, Law Dome ice core, Antarctica).

Yes, it’s an interesting question, and I’ve seen various explanations proposed. I put a bit of time into it this morning, but whiffed on most of what I hoped to find. In particular, I remember Hank Roberts posting a study of oceanic drawdown of CO2 during the period of the war, as a result of the near-cessation of fishing during hostilities. Maybe Hank will repost that for us, if he sees this comment.

However, I did find this: emissions–as opposed to concentrations, for which I didn’t find data–apparently didn’t change that drastically during the war. CDIAC has reconstructed data on emissions, going back to 1751 (obviously, with varying uncertainties over time.) Downloading the numbers for 1930-1960 and popping them into Excel to make a quick and dirty line graph yielded this curve:

You can see a fair drop at the beginning of the period, which makes sense since that was during the early years of the Great Depression, and then again in 1945, following the then emissions record in 1943. The decline is perhaps at least partly explainable in that hostilities ceased in Europe fairly early in 1945: May 8, to be exact. So the largest ‘war effort’ ceased after a third of the year. And Japan’s surrender followed on August 15 (though the official signing wasn’t until September 2), eliminating that ‘war effort’ for an additional third of the year.

It’s interesting to note, though, that the CDIAC breaks down CO2 sources, and the big difference between ’45 and previous years in that regard is that emissions from solid fuel–coal–dropped 227 megatonnes, more than accounting for the total drop of 223 megatonnes. Liquid fuel contributions held steady while gas increased.

Anyway, without actually analyzing the curve, I’m betting that that short-lived anomaly wouldn’t affect the obvious growth trend significantly.

Hmm, that link seems to have embedded. Sorry, Tamino, didn’t expect that. But at least it’s not a video. ;-)

Aerosols? Improved regulation of air quality kicked off in a big way in Western Europe and the US in the early 70s.

Incidentally, has there been any quantification of the effect of aerosols from the increased industrialisation of China and India over the past decade?

Trenberth seems to accept that the PDO is possibly the better explanation for the cooling that started around 1940, which means it may also be a partial explanation for the warming that began in the 1970s.

Your concluding points seem impossible not to agree with, based on this excellent analysis! And I do not feel like disagreeing, either…

Very good points of caution, for anyone talking about a slowdown/hiatus.

You refer to the analysis on RealClimate, which includes a change point analysis of the GISTEMP 1880-2014 data by Niamh Cahill. This analysis shows change points in the data at approximately 1912, 1940 and 1970.

I think this is the reason why even reasonable people are looking out for some kind of change point around 2000. And with another 15 years of data, analysis of the 1880-2030 data may show such a change point. But it certainly is not there in the data now, as Cahill shows, and you demonstrate, very thoroughly and clearly.

I agree that studies on the possible trend change would tend to teach us more about fluctuations than about the trend. So far, I think the existing change points are not well enough explained. Certainly not by the “cyclists” who normally just assume some kind of cycle by eyeballing graphs, and talk loosely about AMO/PDO without showing any analysis. But not by climate science either.

Both Trenberth and Hansen have mentioned the PDO with respect to the 21st-century surface air temperature’s behavior.

Thanks, most useful.

Another point I like to make, using the WoodforTrees website, (with which most folk seem comfortable), is that for nearly all of the time since 1970 it has been possible to fool oneself into saying that “the earth is cooling” whilst somehow it just keeps getting warmer:

http://tinyurl.com/p9nf47r

This, of course, is similar to the Skeptical Science escalator, but since it is a do-it-yourself version it may be more trusted by those afflicted with conspiracy theories.

I have tried to make this same basic case–in far less depth than you here–to deniers with an engineering background. It never, ever, ever works. Engineer deniers seem to be among the most rigid people out there.

The most charitable explanation I can think of is that it is because the concept of natural variation without an imputed cause is completely foreign to their mindset.

As well, I see some physicists making this same–apparent–cognitive error as you point out. It seems basically a holdover from 19th century deterministic thinking. These people just don’t seem to be able to wrap their heads around random error.

I further agree that there may be something left to explain, but as you say it will most likely explain cyclic variations, not trends. These are physically interesting and important to investigate, but they are not central to the concept of warming over multidecadal spans where the cycles will sum to zero. But of course deniers will submit that if there is not 100% explanation down to the most detailed level of every ‘wiggle’, there is effectively no explanation.

Fair go jgn. I’m a civil engineer with 30+ years experience and I wrote this:

http://gergs.net/2014/02/pause/ … and this:

http://gergs.net/2014/03/warming-accelerates/

My stats are rudimentary, but my conclusion is Tamino’s. On the whole I’d say engineers are more swayed by clear evidence than most, and understand variability better than many. How do you think that floor that’s holding you up was designed?*

I find that the undoubted problem with engineer acceptance of AGW has more to do with resistance to engaging the evidence than with any general failure to appreciate variability. That’s unfortunate, because actually doing something about climate change is an engineering task, not a scientific one. (Science is about understanding; engineering is about doing … and I don’t mean the stupid tinkering of ‘geoengineering’.)

(* With a good physical understanding plus a factor of safety of course. But what’s that factor? It’s just an allowance for uncertainty and variability — the overlap of a load distribution and a structural resistance distribution.)

Any post of yours is an antidote for the fatigue experienced by those of us who attempt to combat the misinformation out there.

Clearly explained statistical analyses with a wry wit superimposed.

Thank you.

if you take a data set which is going up like GISS you will have no trouble in proving your point that there is an upward trend.

If you were to take other data sets which do not show this degree of warming at the end you would have to adjust your trend.

I am unsure what data change and for how long it would have to change for you to consider that a pause could be taking place in the GISS set,

obviously not one now.

Good to see you back commentating, It was getting a trifle dull without you.

If there is an ounce of genuine skepticism in you, then data sets that show the surface air temperature anomaly is not going up while sea level continues to rise and ocean heat content anomaly continues to rise and the SST anomaly continues to rise are very suspect.

Then you find an RSS scientist who says the thermometer data sets are more accurate for measuring the surface air temperature.

I think anyone picking on differences in various temperature records should seriously consider this graph:

http://woodfortrees.org/plot/gistemp/mean:13/from:1979/plot/hadcrut4gl/mean:13/from:1979/plot/rss/mean:13/plot/uah/mean:13

And maybe this one, too:

http://woodfortrees.org/plot/gistemp/mean:13/from:1979/plot/hadcrut4gl/mean:13/from:1979/plot/rss/mean:13/plot/uah/mean:13/plot/best/mean:13/from:1979

Doc – I think these two graphs explain why skeptics are so in love with the satellite series. It allows them to believe that “adjustments” to thermometer record are behind the recent increases in GISS, and it sounds very credible to suggest satellites are more accurate.

GISS since 2010

RSS since 2010

Yes, apparently the smaller the cherry, the sweeter the taste.

if you take a data set which is going up… you will have no trouble in proving… there is an upward trend

Is there something missing, or is this tautology the heart of the comment?

There is also the excellent Skeptical Science trend calculator, based on the work of Foster and Rahmstorf (2011).

Foster sounds familiar…

I recall being upset with this Foster guy for stealing Tamino’s ideas without giving him any credit. Then I remembered Tamino is a pen name…

I’ll stick with my layman’s explanation: If you don’t simply look at the global mean but at the values by season and hemisphere, it turns out that temperatures have risen steadily in the southern hemisphere and in the northern hemisphere in summer. In winter in the north there has been a pronounced decrease (due to La Niñas etc.). So if you calculate the mean over three values that go up and one that goes down, the global mean might suggest something different from what has actually happened.

If you find out that three rooms in your house are getting warmer and one is getting cooler, you probably wouldn’t conclude that the heating is defective. You’d probably simply have a look in the fourth room what’s going on.

This may be a gross violation of everything that’s dear to a statistician – but it’s proved to be extremely effective against your assorted climate ‘skeptics’ troll…

I’ve been meaning to write something on this for a while. I find Tamino convincing: if you look over the full period then there’s no slowdown. If we accept things like energy conservation and the concept of a mean/background/climatological temperature, then _all_ of the extreme hiatus claims rely on allowing the temperature at the beginning of the hiatus to take any value.

But we can estimate the background temperature from the longer term trend (say, 1970 until whenever you think the hiatus starts). This is what Tamino and the change point analysis do. All of the extreme hiatus claims result in a magic jump in Earth’s background temperature just before their claimed hiatus began, which is hidden by only looking at post-1997, 1998, 2002… whatever data.

The argument is fundamentally over this. The more extreme hiatus claims must either reject the idea of a background temperature, or must believe in a magic temperature jump just before their chosen period began.

BUT… the purported hiatus period is still interesting. Continued constant trend (which Tamino shows fits the data) is not consistent with the CMIP5 mean. Recent temperatures are at the low end of the CMIP5 envelope which raises fair questions about their performance and what it means for future warming projections. That’s why papers like Kosaka & Xie and England et al. are such valuable scientific contributions.

I believe that there are 60-year cycles involved (IPO, MPO, AMO, whatever), altering the distribution of heat between air and ocean.

That doesn’t contradict AGW, it just superimposes on it.

If you want to remove annual variation, use a 12-month running mean.

If you want to remove 60-year natural cycles, use a 60-year running mean.

http://www.woodfortrees.org/plot/gistemp/mean:720

Until the day this curve starts to curve in the other direction, I would not speak of a “hiatus”…

Hi Tamino! So good to have you back and showing off :) This post is impressive, even for you. Thanks!

> pause in …. 1940s

Much studied by the fisheries ecology folks, though it doesn’t seem to have been possible to include in the climate models yet.

Fishing and whaling all but stopped in the Atlantic during the war, and the large animals (and the ecosystems for which they were top/controlling predators) recovered dramatically. Remember it’s top predators that keep an ecosystem complex; kill off the top and you get a trophic collapse. That had begun before the war. That’s happened, since the war. But if we could quit stripmining the ocean for a decade, it would still recover.

That’s a huge amount of carbon going into everything from plankton on up to large fish and whales.

http://www.academia.edu/4055383/World_War_II_and_the_Great_Acceleration_of_North_Atlantic_Fisheries

Thanks, Hank. Hat-tipped in advance, if you noticed.

I guess I forgot, but what, besides a flawed 1998 starting point, was/is the reason for considering/suspecting a pause in the first place?

I was convinced when I read the Rahmstorf piece on Real Climate that there was no basis for claiming a slowdown or hiatus (he cited Tamino at the end of that presentation). And now this tour de force. Yet, the latest issue of Nature Climate Change has a commentary by England et al. on the “recent hiatus.” I think giving in and repeating this false meme is very damaging. Thanks for this analysis. Incidentally, didn’t I read someplace that Ross McKitrick published a peer reviewed analysis showing no warming since 1995. If so, a review of his analysis would be useful.

[Response: There is this]

Thanks. Just started reading Tamino regularly. I should have looked.

When measuring to within a hundredth of 1 degree the trend becomes “statistically insignificant”

I also have trouble buying the idea of a warming slowdown, for more or less the same reasons Tamino does (although my statistical methods are cruder). A while back I thought I’d take a quick look to see for myself if I could find a slowdown in the warming trend using the cherry-picked start and end dates favored by Bob Tisdale and company. This is what I came up with: http://i.imgur.com/IDWxCtL.png http://i.imgur.com/qXrh71d.png http://i.imgur.com/bSInjLa.png I know there are better techniques for analyzing time series, but simple OLS is good enough to show that any (statistically insignificant) change in the trend that may have occurred is of rounding-error magnitude.

The other day, just out of curiosity, I combined the HadCRUT4, NOAA/NCDC and GISS LOTI data sets, ran a regression on them, then checked to see how many statistically significant powers of the fitted values I could add as explanatory variables to the regression equation. I’m sure this is some form of statistical malpractice, but I thought the chart the procedure produced was interesting: http://i.imgur.com/JtjOopp.png. I couldn’t help noticing how similar the regression line looked to this regression line (annual temperature anomalies regressed on the annual atmospheric co2 concentration) http://i.imgur.com/2ZkFRsv.png?1

It’s relatively easy to conclude that there was a “pause” or “slowdown” if one only considers the central trend values and ignores the uncertainty.

Very ironic that Judith Curry has been one of the ones doing this, given all her claims that others were not properly considering uncertainty.

Yeah. Raising a huge clamor about the uncertainty of the 2014 annual anomaly and totally ignoring the uncertainty about the ‘pause’ is so very consistent. Consistent in terms of always finding a way to misinform/disinform.

Curry gives one reason for pause.

or maybe it would be more accurate to say that “Curry is cause for pause”

And unjust cause, at that.

But curry is hot. How can it cause a cooling pause???

Interestingly, Curry is both unjust and legitimate cause for pause.

A sort of stuporpausition

Well, you provide a quantum of solace, at least.

A pause, a pause, my planet for a pause!

OT, but I hope you’ll take a look:

Curious election statistical observation

http://www.kansas.com/news/politics-government/article17139890.html

http://catless.ncl.ac.uk/Risks/28.59.html

_____excerpt______

… an unexplained pattern that transcends elections and states. …

Clarkson, a certified quality engineer with a Ph.D. in statistics, said she has analyzed election returns … that indicate “a statistically significant” pattern where the percentage of Republican votes increase the larger the size of the precinct.

… In primaries, the favored candidate appears to always be the Republican establishment candidate, above a tea party challenger. And the upward trend for Republicans occurs once a voting unit reaches roughly 500 votes.

“This is not just an anomaly that occurred in one place,” Clarkson said. “It is a pattern that has occurred repeatedly in elections across the United States.” …

Jgnfld,

You talk about engineer sceptics not getting the point Tamino is making.

I’m an engineer. I started off with the roll-out of TACS in the UK, moved on through industry, and now I’m in the process of taking over the management of a calibration laboratory. That means I am responsible for spotting problems, pulling kit out of service and investigating, or leaving it in service and keeping a close eye on it. The buck stops with me. The consequences can be serious if I screw up and leave test standards in service against which industrial equipment is calibrated, when those standards are flawed. What do I mean by serious? Planes ploughing off the runway in fog because we muffed up the calibration of some glide slope / localiser beacon test sets. Train wheels shattering at high speed because we screwed up calibration of an annealing oven. etc etc etc

You get my point?

So how do you think I approach minor aberrations in data? Do you think I wait until they become statistically significant? By which time my company may be named in an accident investigation report. Or do you think I get twitchy fingers and react when I see the numbers skewing?

I get what Tamino is saying, I really do. To do my job I need to be able to assess uncertainty, a statistical exercise, so I get it. But here’s the problem. I look at this graph (NASA GISS LOTI):

And I see a levelling.

I can’t think of an engineering analogy involving a long term time series. But if that was my lab’s turn over, and the years were month numbers, then by now I’d be looking into why turn over had levelled (actually I’d have the answer by now) and what I was going to say to the MD when he popped in for a chat about it (I’d have that too – I’d probably have emailed him already).

So by now you may be thinking ‘yeah, yeah, another denialist’. Far from it, a denialist wouldn’t write a blog post like this:

http://dosbat.blogspot.co.uk/2014/07/that-no-warming-since-1998-bollocks-meme.html

In my job I am guided by the data. I have decades of experience of pulling the plug before I am statistically confident. One might dismiss this, but my experience has taught me that deviations below statistical significance often contain useful information, information that avoids expensive f–k ups. The flip side of this is – pull the plug often without reason and you get the sack. Experience teaches the balance between those extremes.

There are denialists grasping for any items of flotsam because their ship is sinking. Some of them may be engineers, although in my experience, when challenged most of them claiming to be engineers reveal no more of a brush with engineering than the average car driver or mobile phone user. However there are those of us, non scientists, from disciplines such as engineering who see a levelling in data such as the graph I linked to above. We get the mathematical argument, but we see something that would make us take notice in our jobs.

[Response: I quite agree that results don’t need to pass statistical significance to be worthy of investigation, sometimes even action.]

Your entire ‘contention’ seems absurd in the light of what is actually discussed here. You are comparing statistical trend anomalies from baseline to exception with exception to baseline. This train is already off the tracks, remember?

Not quite the same thing, but–

Some years ago, my wife and I found ourselves paddling a canoe downriver after dark (there was this dam that wasn’t on the map… ahem.)

Anyway, there were undercut trees on each bank, virtually parallel with the water’s surface. Inconvenient and potentially dangerous to hit them–and hard to discern in the dark. You could hear them before you could see them.

After the first collision with one, I reasoned that it was better to react promptly–that would enable one, in theory, to miss 50% of the ‘strainers’ at least. Slower action would ensure hitting them all.

To my complete surprise, we didn’t hit another one. Though subjectively it felt that I “couldn’t tell” which way to go, clearly something in me knew perfectly well.

My personal epistemology has never been quite the same since.

I guess what I’d say here are 2 things.

1. I get what you’re saying to some degree in terms of identifying a point failure in a system.

2. BUT, any remotely quantitative examination of the surface temp record will show these nonsignificant “pauses” are quite common in the record. There are references to this above (see Slioch) and the skeptical science escalator is another example. So the present behavior in no way varies from previous behavior. That is, you would have seen nonsignificant “flattenings” repeatedly over the past decades. Plus, starting your examination from a 2-3 sigma deviation depending on series totally biases any notion of “flattening”. The question then becomes: In what way do you propose that this “flattening” is different from all the previous “flattenings”?

As a statistically-minded cognitive psychologist by training rather than an engineer by training like my denier brother(!), I would submit that the odds are you are “seeing” an illusion, not a “flattening”.

jgnfld,

I think you are carrying over an assumption here. That assumption is that someone ‘seeing’ a pause then necessarily proceeds to see a cessation in GW. ‘Pauses’ or ‘drops’ at the end of a time series are likely to be interpreted by humans as breakpoints to a new regime, levelling or falling. Such reasoning is flawed.

The question of whether the forced trend is demonstrably undergoing a breakpoint is best answered using the techniques Tamino employs; of course the answer is a clear ‘no’. And even though Tamino does carry the bias far, for the power of demonstration, allowing biases that support the notion of trend change is not unreasonable when acting conservatively (provided those biases are kept in mind when considering the result). Here by ‘conservative’ I am thinking in my terms as someone who sees new behaviour as potentially problematic.

I contend that every single deviation from the trend in every year contains information, they are _not_ illusions. If one changes perspective to the interannual differences in temperature, one could embark upon examination of these differences with the trend effect minimised, and try to explain them. My hobby is sea ice, I find it fascinating, I cannot explain every up and down totally but I am able to explain most variations from the trend that I have considered and they are not illusory.

We live in a causal universe governed by physical laws, things do not ‘just happen’ at the large scale of planetary temperature.

Not a “flattening” that represents anything new is occurring, that is.

Things may not “just happen”, i.e., truly be totally deterministic (a position I generally hold), yet still be beyond prediction if the underlying phenomena are chaotic in nature. A simple compound pendulum is deterministic yet unpredictable. You simply cannot tell me exactly when it will “flip” after sufficient time has passed. The same may well be true of climate “flips”.

Yes, there is still information in the ‘wiggles’ there and statistics is one good way of extracting that information. But all you will get is a probability statement, not a deterministic prediction. And when flips occur, you may really only be able to talk about them in hindsight…as in examining those specific model runs where the specific pattern we are seeing is found rather than looking at the ensemble mean of all models.

The (possible) illusion I refer to then is the possibility that it is illusory to think that there is a systemic, deterministic change happening that can be understood in a straightforward, if-then, causal way. That simply may not be possible, as scientists in a number of fields have come to realize, each in their own field, for well over a century now.

jgnfld,

But in the case of the compound pendulum it still encompasses a space within which it can vary, and if I add gravitational energy to it (pick it up and put it on the table) that space changes – to a higher level.

I agree that statistics are important, and that absent a strong physical mechanism to suggest the end of AGW (there is none), statistics are the only way to determine the end of a trend. All I am saying is that statistical uncertainty is not the answer to the question ‘is there a levelling in the data?’. Levellings can occur in a trend plus noise.

Anyway, if you will excuse me. I am too busy to continue this discussion, sorry.

I very much agree with what you say here in your last statement. To my mind at least, I don’t think that statement totally agrees with your earlier ones, which involve what appears to me to be thinking more along the lines of identifying point failures rather than describing whole solution spaces.

Chris says “All I am saying is that statistical uncertainty is not the answer to the question ‘is there a levelling in the data?’. Levellings can occur in a trend plus noise.”

When most people talk about “flattening” or “pause” with regard to the temperature data, they are actually referring to the trend.

And I would say that statistics which take the uncertainty into account are the only way that one can really answer the question whether there is actual “levelling in the data” — ie, whether the trend has changed significantly (statistically speaking) from what it was previously or whether the “levelling” is only apparent (due to the noise).

“I contend that every single deviation from the trend in every year contains information, they are _not_ illusions.”

No, that is flawed Chris. There are flattenings here, made with a random number generator and a crude model of the monthly temperature series autocorrelation: http://gergs.net/2015/01/frequency-records/ (one is highlighted and discussed in the text).

Such features contain information only in the sense that weather is information … which of course it is … but not in the context of discussing climate.
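The kind of experiment described above can be sketched in a few lines. This is only a minimal illustration, not the code behind the linked post: the trend of 0.017 °C per year, the AR(1) coefficient `phi`, the shock size and the 10-year window are all assumed values chosen for demonstration, and AR(1) is just one crude stand-in for the autocorrelation of the monthly series.

```python
import random


def ols_slope(y):
    """Least-squares slope of y against 0, 1, 2, ... (units of y per step)."""
    n = len(y)
    x_mean = (n - 1) / 2
    y_mean = sum(y) / n
    sxy = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(y))
    sxx = sum((i - x_mean) ** 2 for i in range(n))
    return sxy / sxx


def synthetic_monthly(n_months=540, trend_per_year=0.017, phi=0.6,
                      shock_sd=0.08, seed=0):
    """Steady linear trend plus AR(1) noise. All parameter values are assumptions."""
    rng = random.Random(seed)
    noise = 0.0
    series = []
    for m in range(n_months):
        # Each month's noise carries over a fraction phi of last month's noise.
        noise = phi * noise + rng.gauss(0.0, shock_sd)
        series.append(trend_per_year * m / 12 + noise)
    return series


def flat_window_starts(series, window=120):
    """Start indices of windows whose fitted slope is zero or negative."""
    return [s for s in range(len(series) - window + 1)
            if ols_slope(series[s:s + window]) <= 0]


if __name__ == "__main__":
    n_runs = 50
    hits = sum(1 for seed in range(n_runs)
               if flat_window_starts(synthetic_monthly(seed=seed)))
    print(f"{hits} of {n_runs} synthetic series contain a flat 10-year window")
```

The underlying trend in every synthetic series never changes, so any flat window the script reports comes from the noise alone.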

Hi Chris. I am not in disagreement with any of your points. It is also true that for electrical engineers (at least), the concepts of signal and noise are standard fare, so much so that there are elegant theorems relating the two (http://en.wikipedia.org/wiki/Shannon%E2%80%93Hartley_theorem). The idea of a forced trend (the signal) over which is superimposed other influences (the noise) is not a stretch at all from an engineering perspective. Does the analogy hold? For a true sceptic, that is a subject for analysis (cue Tamino) rather than dismissal out of hand.

Ammonite,

As is clear from my comment above, I’m not dismissing Tamino, I’m trying to explain why engineers (denialist bias aside) may have a different perspective. Yes, Shannon Hartley is entirely relevant.

Your comments are very clear and concise Chris and I am not in any way accusing you of “dismissal”. Rather, it is an attitude commonly expressed by bloggers *claiming* engineering expertise.

You may want to have a look at my comment upstream. You might just be looking at data that give you a wrong impression of what’s happening. If you look at the data by hemisphere and season (such as in figure 4 on page 6 of the following paper)…

http://www.columbia.edu/~jeh1/mailings/2014/20140121_Temperature2013.pdf

…you can see no such thing as a ‘leveling’ in three of four datasets. And the fourth one is a decline, not a leveling. Which simply means that the aggregate figure gives you the impression of a leveling – just that it’s basically an artifact of the calculation you’re using, not a reflection of what’s actually happening (…which is – since this graph is about the atmosphere – that warming continues, because ice and permafrost are melting and oceans are warming – all of which is energy that’s no longer available for warming the atmosphere).

Bottom line: As an engineer, you’re probably accustomed to breaking the problem down into more detailed aspects, once the data don’t pass your smell-test. Same here…

Random,

Same thing happens in sea ice data, and there the hunt for mechanism begins. Note that in the blog post I did on this subject (see my initial comment) I did reference Cohen’s work on Boreal winter cooling. Now I am also aware that the land ocean temperature index is not the whole story, ocean heat content is increasing. However consistency is important, I’ve used GISS for years and continue to use it as a ‘pretty good’ index.

Many (if not most) of the people claiming that temperature has “paused” (slowed down, flattened, etc) do so with the purpose of “proving” that climate science is wrong. For example, we hear “Well CO2 kept going up but temperature ‘flattened’.” (hell, you can just “see” it on the graph!)

That sort of proof requires a higher standard (2-sigma at least) than simply a “hunch” that something has happened.

This is particularly true given what scientists know about the climate system (about the greenhouse properties of CO2, about brief “pauses” in the temperature record that turned out not to be, etc) and given the fact that in the case of climate, not doing anything is not an option.

What I find most “interesting” (telling, actually) is that many of the people who claim a “pause” without meeting the significance standard are the very same ones who yap endlessly that there has been no “significant” warming over the last 15 years. So scientists have to meet the standard, but they don’t. How convenient.

Finally, Chris’s argument above is actually the inverse of the climate situation. He is proactive when he perceives a change in the data below the usual standard of significance because to do otherwise could be disastrous. Climate change deniers use the supposed “flattening” below the standard of significance as an argument to do nothing. But doing nothing is almost assuredly going to lead to disaster.

It is not that the rate of surface warming has not changed in the short term. It has. It does frequently. The question is whether the change is sufficiently significant to indicate a change in climate. It isn’t. Not even close.

I suspect the issue is that if your production data were as “noisy” as the temperature data, THAT is what would attract your attention. Long before you saw such a plateau, you’d be asking, “Why is production so inconsistent?”

Indeed, that is what the scientists are doing. The science of greenhouse warming and CO2 is not exactly cutting edge. Yes, we want to understand feedbacks better, and yes, we want to nail down system response. However, climate scientists are now more interested in processes that for the purposes of climate change are “noise”.

Remember the old saw about the accuracy of economic predictions–they’re so accurate that they’ve predicted 10 out of the last 5 recessions. I suspect that if your data were so noisy, and you responded to every fluctuation that seemed suspicious, your employer would fire you for interrupting production too often.

That is the really sad thing about the denialists–they’re too dumb to even know where the action (and the uncertainty) lie.

What if you adjust the temperatures of the years which were cooled by the Chichon and Pinatubo eruptions?

see also: http://www.nature.com/ngeo/journal/v7/n3/fig_tab/ngeo2098_F1.html

what they are seeing (graph): RSS, yellow trend line; GISS, red trend line

I do not quite see how it is going to work to tell people they are not seeing this. Whatever it is, it is lowering 30-year trends. Despite 2010 and 2014 being record warmest years, the 30-year trend is getting smaller (this lessening is losing steam quickly and may soon reverse itself).

[Response: Odd to talk about “lowering 30-year trends” while referring to a graph that only shows 20 years. Also odd to talk about “seeing this” when one of the main insights of statistics is that “looks like” is such an easy way to fool yourself.]

You can ask anybody who follows Climate Etc., I am not fooled by the flat lines. My position is a lot of very intelligent people have been completely fooled by the pause.

To tell people they are not seeing flat lines is fraught with problems. They are going to think they’re being insulted. Especially given the acknowledgement of a hiatus from scientists like Hansen and Trenberth, and organizations like the IPCC, and the long list of papers trying to explain it.

increasing and declining trends during the period included in the ‘no warming for 18 years’

30 years to present, slope = 0.0164865 per year

30 years thru 12/31/2014, slope = 0.0165444 per year

30 years thru 12/31/2010, slope = 0.0186036 per year

30 years thru 12/31/2005, slope = 0.0188261 per year

30 years thru 12/31/1998, slope = 0.0158677 per year

So: .16C/.19C/.19C/.17C/.16C – still well above the trend thru 1998.

Exactly so. You can’t successfully tell folks they ‘aren’t seeing something.’

You can, however, point out that there are other things to see, which have quite different implications. For instance, adjusting the ‘start dates’ of your original graph later by one year paints quite a different picture:

http://woodfortrees.org/plot/gistemp/from:2003/trend/plot/gistemp/from:2006/trend/plot/gistemp/from:1995/mean:3/plot/gistemp/from:1995/trend/plot/gistemp/from:2003/mean:3/plot/gistemp/from:2014/plot/rss/from:1999/trend/offset:0.35

Then the question becomes, what is the meaning of these two apparently differing images? How is it that a ‘cooling trend’ turns into a ‘warming trend’ after just a year?

The answer, of course, leads right back here–to the Society For The Prevention Of Statistical Abuse.

Given the uncertainty attached to all those values, I think it would be most accurate to say that they are not significantly different from one another (in the statistical sense).

PS You might want to check your last (ten-millionth’s) digit on those values. But just the last one. :-)
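For anyone who wants to attach uncertainties to slopes like those listed above, here is a rough sketch. It is not how the quoted figures were produced; it assumes white-noise residuals, which understates the uncertainty of autocorrelated temperature data, and the function names are made up for illustration.

```python
import math


def slope_and_se(y):
    """OLS slope of y against 0..n-1, with its white-noise standard error."""
    n = len(y)
    x_mean = (n - 1) / 2
    y_mean = sum(y) / n
    sxx = sum((i - x_mean) ** 2 for i in range(n))
    slope = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(y)) / sxx
    intercept = y_mean - slope * x_mean
    # Residual sum of squares around the fitted line.
    rss = sum((v - (intercept + slope * i)) ** 2 for i, v in enumerate(y))
    se = math.sqrt(rss / (n - 2) / sxx)
    return slope, se


def intervals_overlap(a, b, k=2.0):
    """True if the slope +/- k*se intervals of two (slope, se) pairs overlap."""
    (s1, e1), (s2, e2) = a, b
    return abs(s1 - s2) <= k * (e1 + e2)
```

Feeding two 30-year windows of annual values through `slope_and_se` and then `intervals_overlap` gives a crude check. It is not a formal test: overlapping windows share most of their data, and a proper comparison would also correct the standard errors for autocorrelation.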

kevin

I think SPCN also works: Society for Prevention of Cruelty to Numbers.

After all, it’s really not fair to use statistics to torture numbers at will every day — Blackboarding, chasing up Bishops hill, Auditing, and worst of all WUWTing (drawing and quartering of graphs — a cruelty so unspeakable that not even ISIS has resorted to it)

JCH, a variation on the theme of your graph is demonstrated here:

http://www.skepticalscience.com/resolving-met-office-confusion.html#89481

Should probably update the graph at some point – it’s been years now that I’ve been poking at those who proclaim “hiatus”…

This leads me to a game I’ve played with several different deniers recently. The narrative is pretty much the same, and starts with their statement that “there’s been no warming for 15/17/18 years”. To reduce things to their simplest I say “but last year was the hottest year on record, so it must be warming”, to which they inevitably try to counter with something to the effect of “but, variability”. Which is of course exactly what I want them to say…

So when I respond with “ah, variability, that must mean that you need to have enough data to detect the signal from the noise – what period of time provides sufficient data to identify either a real increase or a real pause from the variability in the dataset?” It’s at about this point in the proceedings that they realise that they’ve walked themselves into a trap, and that the answer invalidates any claim of a pause: I’ve never had anyone attempt to continue the conversation beyond this stage.

It once almost came to blows with a drunken acquaintance though…

Pretty much the same conversation happens on blogs, but without the satisfaction of seeing the hunted looks in their eyes when they realise that they’re screwed.
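The "what period of time provides sufficient data" question can be given a back-of-envelope answer. The sketch below assumes annual values with independent (white) noise, for which the standard error of a fitted slope over n years is σ / sqrt(n(n² − 1)/12). The trend and noise values in the usage note are illustrative assumptions, not fitted to any data set.

```python
import math


def slope_se(n, noise_sd):
    """Standard error of an OLS slope over n equally spaced points, white noise."""
    return noise_sd / math.sqrt(n * (n * n - 1) / 12)


def min_years_to_detect(trend, noise_sd, z=2.0, max_years=200):
    """Smallest n for which trend / slope_se(n) reaches z, or None if never."""
    for n in range(3, max_years + 1):
        if trend / slope_se(n, noise_sd) >= z:
            return n
    return None
```

With an assumed trend of 0.017 °C per year and noise of 0.1 °C, `min_years_to_detect(0.017, 0.1)` returns 12. Real temperature noise is autocorrelated, which pushes the answer higher, so this is a lower bound under the stated assumptions rather than a recommendation.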

Bernard, nice tactics.

I suppose that’s why some now refuse to accept that the surface record has any validity. As Rob H pointed out elsewhere, if this Nino turns out to be big, they’ll be fresh out of data sets.

Global warming is “global”. Look at the whole globe, not just the surface. Most of the heat goes into the ocean. More heat in the globe, and sea level rises.

Sea level rise has not slowed down. There is no pause in global warming!

Surface and atmospheric temperatures are inherently noisy. If one is going to study global warming by using surface and atmospheric temperatures, then you need a VERY good statistician.

Consider, an increase in water vapor content of the atmosphere will increase the heat content of the atmosphere as much as a rise in temperature – and global warming is total heat, not just some dry air temperature. The last few years have been warm, and there is more water vapor (heat) in the air. Not just in the tropics, but everywhere. This last winter it snowed repeatedly across the Arctic. It did not snow in the Arctic in 1998 – because there was not enough water vapor in the air over the Arctic. That is, in 1998, the Arctic was still too cold (not enough latent heat) for substantial and extensive snow.

There has been no pause in warming! Both the latent heat and the sensible heat of the atmosphere have increased. Together they have maintained the trend in atmospheric warming. When all we have is the temperature, and lack the latent heat data, then we need a VERY good statistician.

IMHO, it’s all about the definition of significance levels. If somebody is content with low levels like .35 or so, he or she can find as many trend changes as he or she wants.

BTW, there is no such thing as “the real trend in warming” out there.

The real trend is exactly, not more and not less, what we define it to be in terms of smoothing algorithms. Just like there is no such thing as “intelligence”. Only what we measure in IQ-tests.

@ Aaron Lewis: good point, to consider the heat content not just the temperature.

Horatio, you nearly freeze my blood. However, you did forget one (or perhaps were too delicate to mention it.)

I refer, of course, to Brenchlification, in which the trend is crucified, inverted. Some scholars think this is the same as “Easterbrooking”, but that appears rather to be a euphemism for simple decapitation.

*It’s ironic that quite a different Curry comes into this story.

Must be the Lordzheimers kicking in.

Thanks for reminding me (Not!)

I am off-topic, but… my 2nd article in a peer-reviewed science journal will appear this month:

Levenson, B.P. 2015. Why Hart Found Narrow Ecospheres–A Minor Science Mystery Solved. Astrobiology 15, 327-330.

A hoax, surely.

Congratulations.

Well, you’ve doubled my count :-p

Minor suggestion, segregate the monthly data by seasons and then treat each as a separate data set over the 45 year period since 1970. Compare and contrast.

Changes are more likely to be between seasons than annual.

Pertinent article by Stephan Lewandowsky in Global Environmental Change

Seepage: The effect of climate denial on the scientific community.

……

In our article, we illustrate the consequences of seepage from public debate into the scientific process with a case study involving the interpretation of temperature trends from the last 15 years, the so-called ‘pause’ or ‘hiatus’. This is a nuanced issue that can be addressed in multiple different ways. In this article, we focus primarily on the asymmetry of the scientific response to the so-called ‘pause’—which is not a pause but a moderate slow-down in warming that does not qualitatively differ from previous fluctuations in decadal warming rate. Crucially, on previous occasions when decadal warming was particularly rapid, the scientific community did not give short-term climate variability the attention it has recently received, when decadal warming was slower. During earlier rapid warming there was no additional research effort directed at explaining ‘catastrophic’ warming. By contrast, the recent modest decrease in the rate of warming has elicited numerous articles and special issues of leading journals and it has been (mis-)labeled as a ‘pause’ or ‘hiatus’. We suggest that this asymmetry in response to fluctuations in the decadal warming trend likely reflects the ‘seepage’ of contrarian memes into scientific work…..

4. Is the research on the “pause” wrong?

No. On the contrary, irrespective of the framing chosen by their authors, all articles on the pause have reinforced the reality of global warming from greenhouse gas emissions, and this body of work has yielded more knowledge of the processes underlying decadal variation. None of this work has come to the conclusion that the physical processes underlying global warming are somehow in abeyance or that prevailing scientific conceptions of them are incorrect.

However, by accepting the framing of a recent fluctuation as a ‘pause’ or ‘hiatus’, research on the pause has, ironically and unwittingly, entrenched the notion of a ‘pause’ (with all the connotations of that term) in the literature as well as in the public’s mind. Some of that research may therefore have inadvertently misdirected public attention.

http://summitcountyvoice.com/2015/05/08/is-there-a-global-warming-pause-or-not/

I admire and appreciate the objective, detailed analysis. Thanks.

Please forgive a moment of OT. Horatio is back, made my day. Oddly, I was recapping one of his creative works to the tune of Sam Cooke just this afternoon when I got the notice of a new Tamino post and took a look.

This was Horatio’s take, which with 20:20 hindsight I think was meant to reference cosmology rather than cosmetology, though there’s far too much cosmetology about these days:

https://tamino.wordpress.com/2011/06/28/skeptics-real-or-fake/#comment-52095